TikTok made waves this summer when its CEO Kevin Mayer announced on the company’s blog that the company would be releasing its algorithms to regulators and called on other companies to do the same. Mayer described this decision as a way to provide “peace of mind through greater transparency and accountability,” and to demonstrate that TikTok “believe[s] it is essential to show users, advertisers, creators, and regulators that [they] are responsible and committed members of the American community that follows US laws.”

It’s hardly a coincidence that TikTok’s news broke the same week that Facebook, Google, Apple and Amazon were set to testify in front of the House Judiciary’s antitrust panel. TikTok has quickly risen as fierce competition to these U.S.-based players, who acknowledge the competitive threat TikTok poses and have also cited TikTok’s Chinese origin as a distinct threat to the security of its users and to American national interests. TikTok’s news signals an intent to pressure these companies to increase their own transparency as they push back on TikTok’s ability to continue operating in the U.S.

Now, TikTok is again in the news over the same algorithms, as a deal for a potential sale of TikTok to a U.S.-based company hit a roadblock with new uncertainty over whether its algorithms would be included in the sale. According to the Wall Street Journal, “The algorithms, which determine the videos served to users and are seen as TikTok’s secret sauce, were considered part of the deal negotiations up until Friday, when the Chinese government issued new restrictions on the export of artificial-intelligence technology.”

This one-two punch of news over TikTok’s algorithms raises two major questions:

1. What is the value of TikTok with or without its algorithms?

2. Does the release of these algorithms actually increase transparency and accountability?

The second question is what this post will dive into, and it gets to the basis upon which Fiddler was founded: how to provide more trustworthy, transparent, accountable AI.

TikTok’s AI Black Box

While credit is due to TikTok for opening up its algorithms, its hand was largely forced here. Earlier this year, numerous articles expounded upon the potential biases within the platform. Users found that, much like other social media platforms, TikTok recommended accounts based on the accounts users already followed. But the recommendations were similar not only in type of content, but also in physical attributes such as race, age, or facial characteristics (down to things like hair color or physical disabilities). According to an AI researcher at the UC Berkeley School of Information, these recommendations get “weirdly specific – Faddoul found that hitting follow on an Asian man with dyed hair gave him more Asian men with dyed hair.” Aside from criticisms around bias, concerns about opacity around the level of Chinese control over and access to the algorithms added pressure to increase transparency.

TikTok clearly made a statement by responding to this pressure and being the first mover in releasing its algorithms in this way. But how will this release actually impact lives? Regulators now have access to the code that drives TikTok’s algorithms, its moderation policies, and its data flows. But sharing this information does not necessarily mean that the way its algorithms make decisions is actually understandable. Its algorithms are largely a black box, and it’s incumbent on TikTok to equip regulators with the tools to see into this black box and explain the ‘how’ and ‘why’ behind the decisions.

The Challenges of Black Box AI

TikTok is hardly alone in the challenge of answering for the decisions its AI makes and moving away from black box algorithms to increase explainability. As the potential for application of AI across industries and use cases grows, new risks have also emerged: over the past couple of years, there has been a stream of news about breaches of ethics, lack of transparency, and noncompliance due to black box AI. The impacts of this are far-reaching. This can mean negative PR: news about Amazon’s AI-powered recruiting tool being biased against women and Apple Card being investigated after gender discrimination complaints led to months of bad news stories for the companies. And it’s not just PR that companies have to worry about. Regulations are catching up with AI, and fines and regulatory impact are starting to be real concerns. For example, New York’s insurance regulator recently probed UnitedHealth’s algorithm for racial bias. Bills and laws demanding explainability and transparency and increasing consumers’ rights are being passed within the United States as well as internationally, which will only increase the risks of non-compliance within AI.

Beyond regulatory and financial risks, as consumers become more aware of the ubiquity of AI within their everyday lives, the need for companies to build trust with their customers grows more important. Consumers are demanding accountability and transparency as they begin to recognize the impact these algorithms can have on their lives, for issues big (credit lending or hiring decisions) and small (product recommendations on an ecommerce website).

These issues are not going away. If anything, as AI becomes more and more prevalent in everyday decision making and regulations inevitably catch up with its ubiquity, companies must invest in ensuring their AI is transparent, accountable, ethical, and reliable.

But what’s the solution?

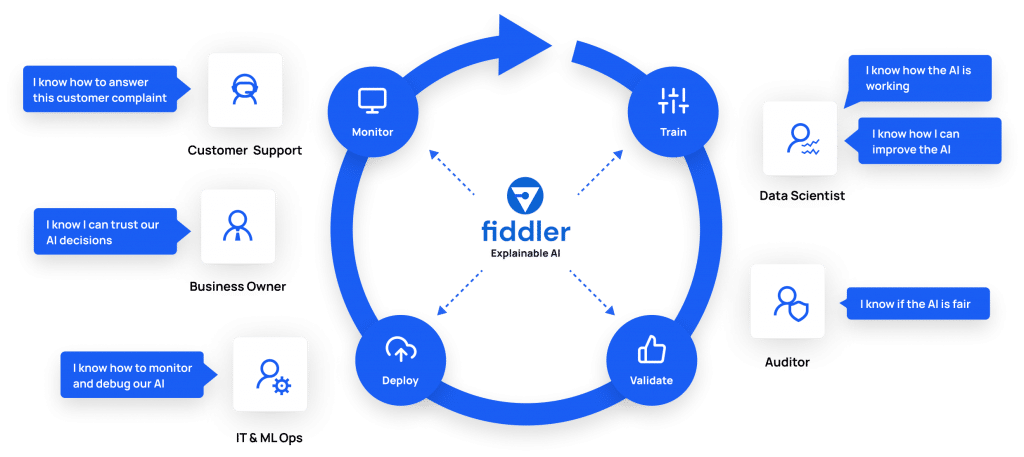

At Fiddler, we believe that the key to this is visibility and transparency of AI systems. In order to root out bias within models, you must first be able to understand the ‘how’ and ‘why’ behind problems so you can efficiently find the root cause of issues. When you know why your models are doing something, you have the power to make them better while also sharing this knowledge to empower your entire organization.

But what is Explainable AI? Explainable AI refers to the process by which the outputs (decisions) of an AI model are explained in terms of its inputs (data). Explainable AI adds a feedback loop to the predictions being made, enabling you to explain why the model behaved the way it did for a given input. This helps you provide clear and transparent decisions and build trust in the outcomes.
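To make “explaining outputs in terms of inputs” concrete, here is a minimal sketch for a toy linear credit-scoring model. Everything in it is an illustrative assumption: the feature names, the weights, and the baseline applicant are hypothetical, and the attribution rule (weight times the difference from a baseline) only applies to linear models. It is not a description of how Fiddler or TikTok explain their models.

```python
from typing import Dict

# Hypothetical weights for a toy linear scoring model (illustrative only).
WEIGHTS: Dict[str, float] = {
    "income": 0.5,
    "debt_ratio": -2.0,
    "years_employed": 0.25,
}
BIAS = 1.0


def predict(features: Dict[str, float]) -> float:
    """Score an applicant with the toy linear model."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def explain(features: Dict[str, float], baseline: Dict[str, float]) -> Dict[str, float]:
    """Attribute the score difference from the baseline to each input.

    For a linear model, each feature's contribution is simply
    weight * (value - baseline value), and the contributions add up
    to predict(features) - predict(baseline).
    """
    return {
        name: WEIGHTS[name] * (features[name] - baseline[name])
        for name in WEIGHTS
    }


# Hypothetical "typical" applicant used as the reference point.
baseline = {"income": 4.0, "debt_ratio": 0.5, "years_employed": 4.0}
applicant = {"income": 6.0, "debt_ratio": 0.75, "years_employed": 6.0}

contributions = explain(applicant, baseline)
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

Each printed number says how much that input pushed this applicant’s score above or below the baseline applicant’s score. Real models are rarely linear, so production explainability tools rely on more general attribution methods, but the shape of the answer (a per-input contribution to a single decision) is the same.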

Explainability on its own is largely reactive. In addition to being able to explain the outcomes of your model, you must be able to continuously monitor the data that is fed into the model. Continuous monitoring gives you the ability to be proactive rather than reactive, meaning you can drill down into key areas and detect and address issues before they get out of hand.
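One common way to monitor input data is to compare the distribution a model sees in production with the distribution it was trained on. The sketch below uses the Population Stability Index (PSI) for a single numeric feature. The bin edges, the sample values, and the 0.2 alert threshold (an often-quoted rule of thumb) are assumptions chosen for illustration, and this is not presented as Fiddler’s monitoring method.

```python
import bisect
import math
from typing import List, Sequence


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Return the fraction of values in each bin defined by sorted edges.

    Values outside the outer edges are clamped into the first or last bin.
    """
    counts = [0] * (len(edges) - 1)
    for v in values:
        idx = bisect.bisect_right(edges, v) - 1
        idx = min(max(idx, 0), len(counts) - 1)
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index between a reference and a live sample."""
    total = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical user ages at training time versus in live traffic.
training_ages = [20, 25, 30, 35, 40, 45, 50, 55]
live_ages = [19, 20, 21, 22, 23, 24, 25, 50]
age_edges = [18, 30, 45, 60]

DRIFT_THRESHOLD = 0.2  # assumed rule-of-thumb cutoff, tune per use case
score = psi(training_ages, live_ages, age_edges)
needs_review = score > DRIFT_THRESHOLD
print(f"PSI = {score:.2f}, needs review: {needs_review}")
```

Run on a schedule against each input feature, a check like this flags a shift in the incoming data before it shows up as a pattern of bad decisions, which is the proactive half of the picture.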

Explainable monitoring increases transparency and actionability across the entire AI lifecycle. This instills trust in your models among stakeholders inside and outside of your organization, including business owners, customers, customer support, IT and operations, developers, and internal and external regulators.

While much is unknown about the future of AI, we can be sure that the need for responsible and understandable models will only increase. At Fiddler, we believe there is a need for a new kind of Explainable AI Platform that enables organizations to build responsible, transparent, and understandable AI solutions. We’re working with a range of customers across industries, from banks to HR companies, from Fortune 100 companies to startups in the emerging technology space, empowering them to do just that.

If you’d like to learn more about how to unlock your AI black box and transform the way you build AI into your systems, let us know.