Our Generative AI Meets Responsible AI summit included an excellent panel discussion on best practices for responsible AI. The panel was moderated by Fiddler's own Chief AI Officer and Scientist, Krishnaram Kenthapadi, and included these accomplished panelists: Ricardo Baeza-Yates (Director of Research, Institute for Experiential AI, Northeastern University), Toni Morgan (Responsible Innovation Manager, TikTok), and Miriam Vogel (President and CEO, EqualAI; Chair, National AI Advisory Committee). We rounded up the top three takeaways from this panel.

TAKEAWAY 1

ChatGPT and other LLMs may exacerbate the global digital divide

Ricardo Baeza-Yates showed how inaccurate ChatGPT can be by walking through errors in biographies of him generated by GPT-3.5 and GPT-4, including false employment history, invented awards, and incorrect birth information. Most notably, GPT-3.5 states that he is deceased. He noted that even after the update to GPT-4, the newer version still contains errors and inconsistencies.

He also identified problems with translations into Spanish and Portuguese, observing that the system hallucinates in these languages, producing different false facts and inconsistencies than it does in English. He emphasized the problem of limited language resources: only a small fraction of the world's languages have enough resources to support AI models like ChatGPT. This contributes to the digital divide and worsens global inequality, because speakers of less-supported languages have limited access to these tools.

Educational inequality may also widen because of uneven access to AI tools. People with the education to use these tools may thrive, while those without access or knowledge may fall further behind.

TAKEAWAY 2

Balancing automation with human oversight is crucial

Toni Morgan shared the following insights from their work at TikTok. AI can inadvertently perpetuate biases and particularly struggles with context, sarcasm, and the nuances of different languages and cultures, which can lead to unfair treatment of specific communities. To counter this, teams must work closely together to develop strategies that promote AI fairness and prevent unintended impacts on particular groups. When a system has trouble distinguishing hateful content from reappropriated content, collaboration with content moderation teams is essential. This requires ongoing research, regular updates to community guidelines, and a demonstrated commitment to leveling the playing field for all users.

Community guidelines covering hate speech, misinformation, and explicit material are necessary and helpful. However, AI-driven content moderation systems often struggle to understand context, sarcasm, reappropriation, and nuances across languages and cultures. Addressing these challenges takes diligent work to ensure that content governance decisions are correct and that incorrect moderation decisions are avoided.

Balancing automation with human oversight is a challenge across the industry. AI-driven systems offer significant benefits in efficiency and scalability, but relying on them alone for content moderation can lead to unintended consequences. Striking the right balance between automation and human oversight is crucial to ensuring that machine learning models and systems minimize bias while aligning with human values and societal norms.

TAKEAWAY 3

Achieving responsible AI requires internal accountability and transparency

Miriam Vogel offered the following tips. Ensure accountability by designating a C-suite executive responsible for major decisions or issues, which gives everyone a clear point of contact. Standardize processes across the enterprise to build trust within the company and with the general public. While external AI regulations are important, companies can take internal steps to communicate the trustworthiness of their AI systems. Documentation is a critical part of good AI hygiene: record which tests are run and how often, and make sure that information stays accessible throughout the AI system's lifespan. Establish regular audits to stay transparent about testing, its objectives, and any limitations in the process.

The NIST AI Risk Management Framework, released in January, is a voluntary, law-agnostic, and use-case-agnostic guidance document developed with input from global stakeholders across industries and organizations. The framework offers best practices for AI implementation, but because standards vary around the world, this program aims to bring together industry leaders and AI experts to further define best practices and discuss how to operationalize them effectively.

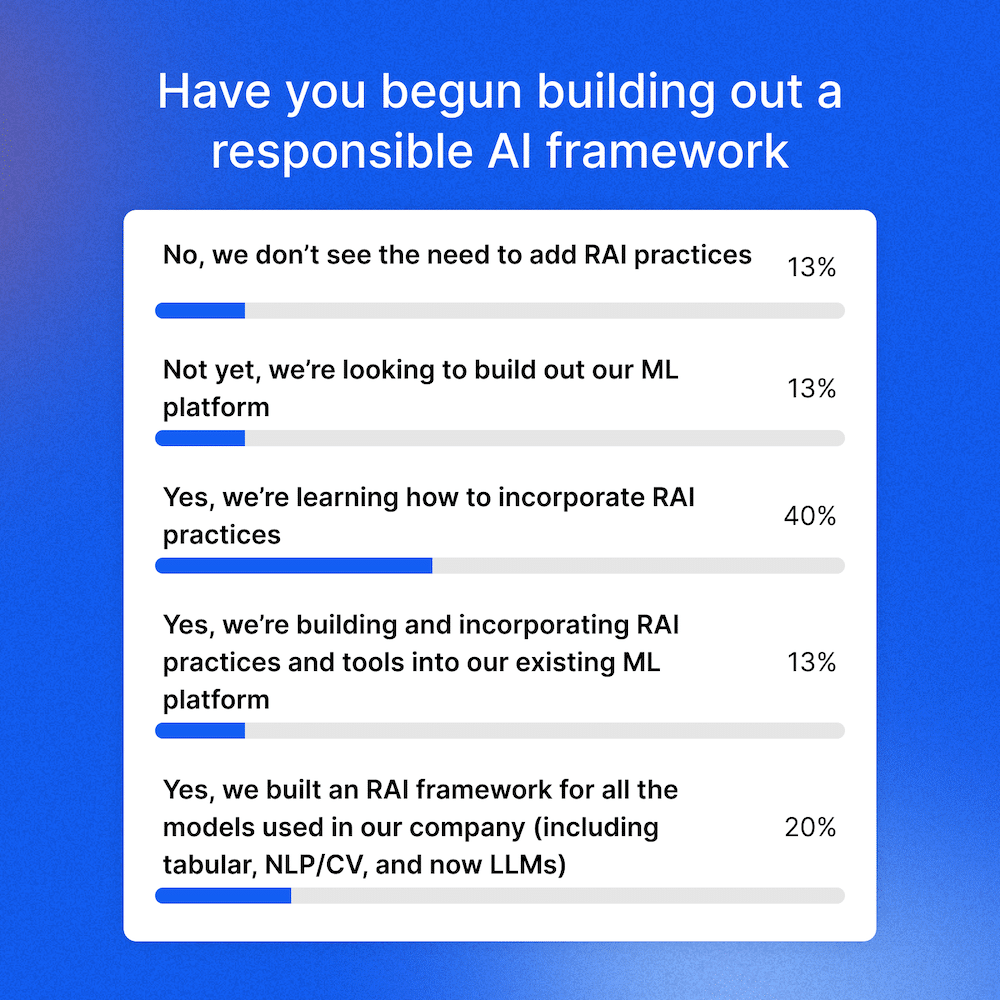

We asked the audience whether they had begun building out a responsible AI framework and received the following responses:

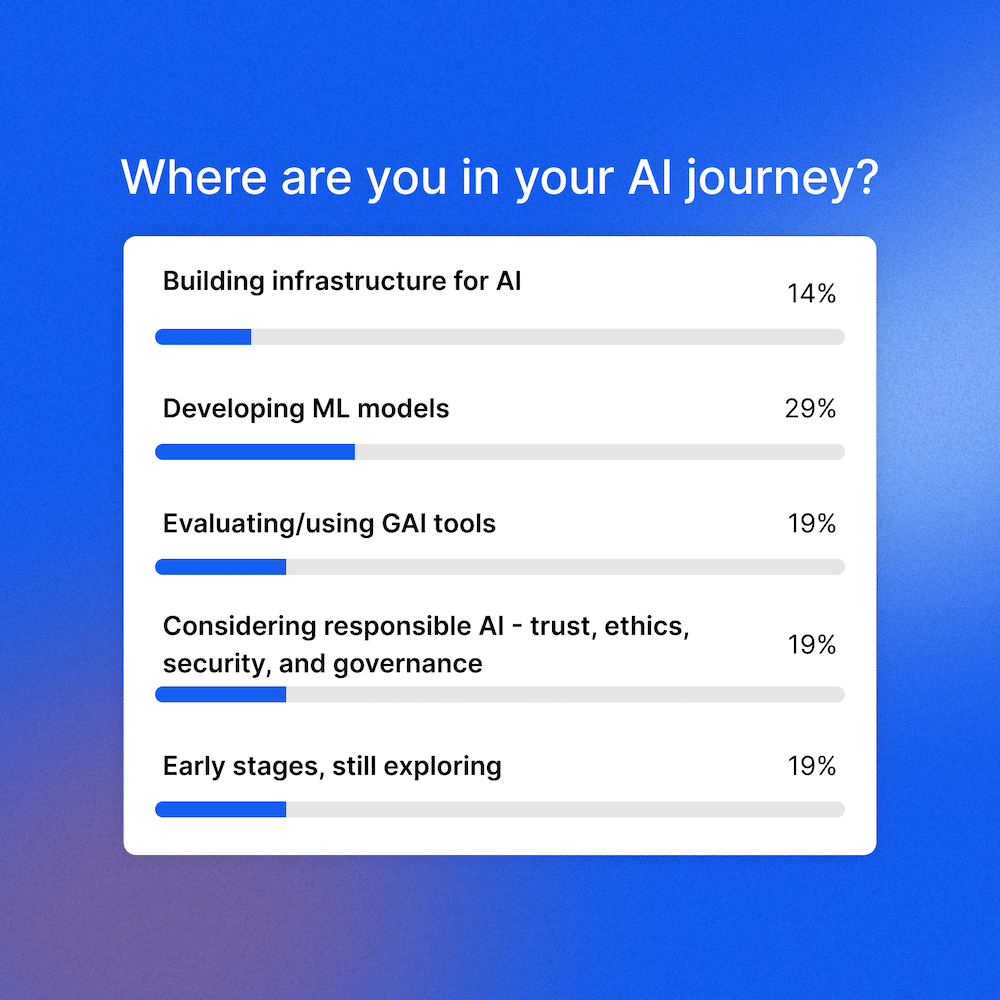

We then asked the audience where they were in their AI journey:

Watch the rest of the Generative AI Meets Responsible AI sessions.