Over the past three years, Zillow invested hundreds of millions of dollars into Zillow Offers, its AI-enabled home-flipping program. The company intended to use ML models to buy thousands of houses per month, which would then be renovated and sold for a profit. Unfortunately, things didn't go to plan. Recently, news came out that the company is shutting down its iBuying program, which overpaid for thousands of houses this summer, and laying off 25 percent of its staff. Zillow CEO Rich Barton said the company did not predict home price appreciation accurately: "We've determined the unpredictability in forecasting home prices far exceeds what we anticipated."

With news like Zillow's becoming more and more common, it's clear that the economic opportunities AI presents don't come without risks. Companies using AI face business, operational, ethical, and compliance risks associated with implementing it. When these issues are not addressed, they can lead to real business impact, loss of user trust, negative publicity, and regulatory action. Companies vary widely in the scope of and approach to addressing these risks, largely because different industries are governed by different regulations.

This is where we can all learn from the financial services industry. Over the years, banks have implemented policies and systems designed to safeguard against the potential adverse effects of models. After the 2008 financial crisis, banks had to comply with SR 11-7, the Federal Reserve's guidance on model risk management, the intent of which was to ensure banking organizations were aware of the adverse consequences (including financial loss) of decisions based on models. As a result, financial services firms have implemented some form of model risk management (MRM).

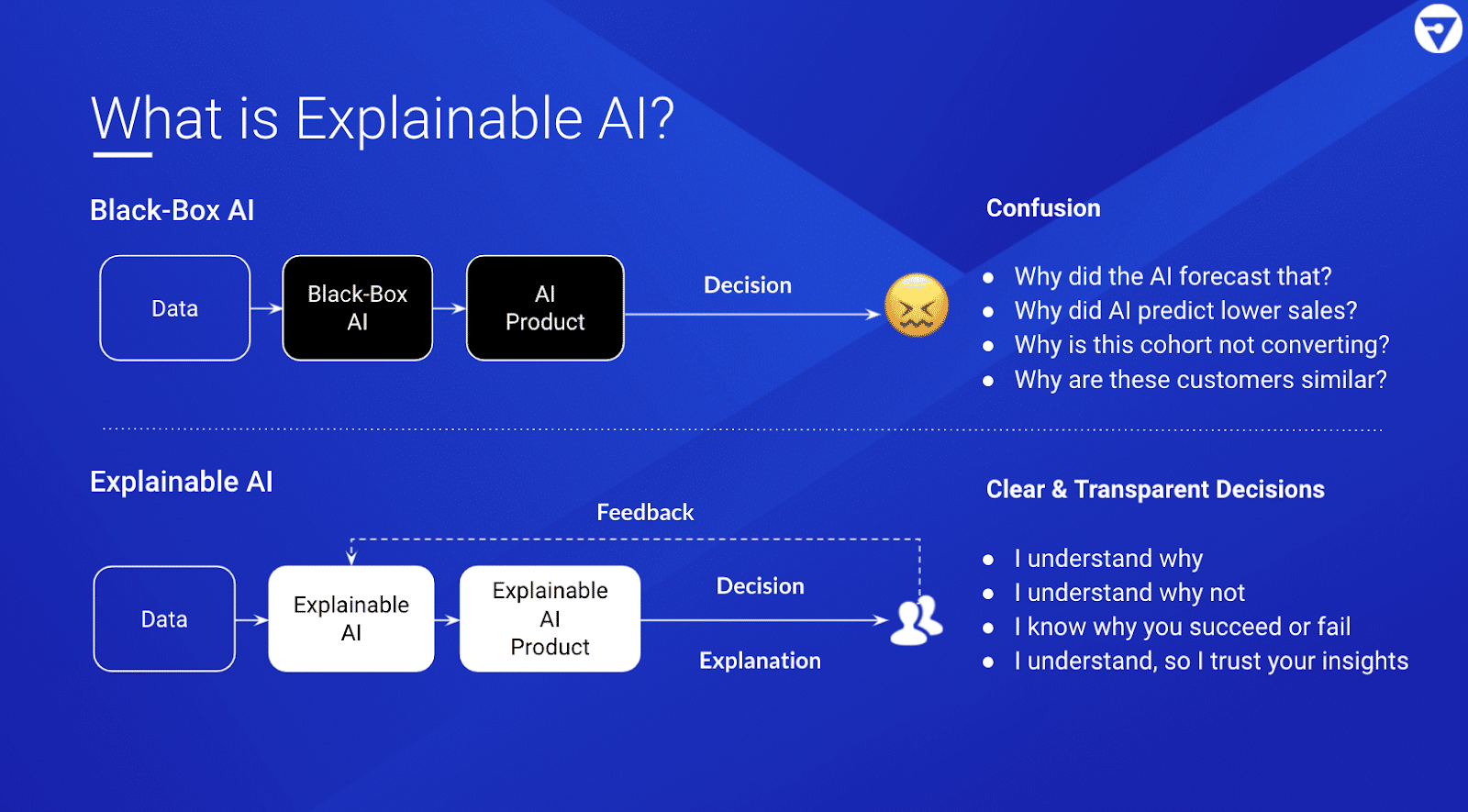

In this article, we describe what MRM means and how it might have helped in Zillow's case. Model risk management is a process for assessing all the potential risks that organizations can incur as a result of decisions made by incorrect or misused models. MRM requires understanding how a model works, not only on existing data but also on data not yet seen. As organizations adopt ML models that increasingly behave as black boxes, those models become harder to understand and diagnose. Let us examine carefully what we need to understand and why. From SR 11-7, Supervisory Guidance on Model Risk Management:

Model risk occurs primarily for two reasons: (1) a model may have fundamental errors and may produce inaccurate outputs when viewed against its design objective and intended business uses; (2) a model may be used incorrectly or inappropriately, or there may be a misunderstanding about its limitations and assumptions. Model risk increases with greater model complexity, higher uncertainty about inputs and assumptions, broader extent of use, and larger potential impact.

Therefore, it's paramount to understand the model in order to mitigate the risk of errors, especially when it is used in an unintended way, incorrectly, or inappropriately. Before we dive into the Zillow situation, it's worth clarifying that this isn't an easy thing to solve in an organization: implementing model risk management isn't just a matter of installing a Python or R package.

Model Risk in Zillow's case

As user @galenward tweeted a few weeks ago, it would be interesting to find out where in the ML stack Zillow's failure lives:

- Was it an incorrect or inappropriate use of ML?

- Too much trust in ML?

- Aggressive management that wouldn't take "we aren't ready" for an answer?

- Incorrect KPIs?

We can only hypothesize about what happened in Zillow's case. But since predicting house prices is a complex modeling problem, there are four areas we'd like to focus on in this article:

- Data Collection and Quality

- Data Drift

- Model Performance Monitoring

- Model Explainability

Data Collection and Quality

One of the problems with modeling assets is that they depreciate based on human usage, which makes them fundamentally hard to model. For example, how do we get data on how well a house is being maintained? Say there are two identical houses in the same zip code, each 2,100 square feet with the same number of bedrooms and bathrooms: how do we know one was better maintained than the other?

Much of the data in real estate seems to come from a variety of MLS (Multiple Listing Service) data sources, which are maintained at a regional level and are prone to variability. These data sources collect home condition assessments, including pictures of the house and additional metadata. In theory, a company like Zillow could apply sophisticated AI/ML methods to assess house quality. However, there are hundreds of MLS boards, and the feeds from different geographies can have data quality issues.
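As a rough illustration of how a team might start quantifying feed-level quality problems, the sketch below runs simple completeness and plausibility checks on listing records and reports the share of problem records per feed. The field names, the plausibility bounds, and the sample records are hypothetical assumptions, not a real MLS schema.

```python
import datetime

# Hypothetical plausibility bounds per field; a real schema would differ by MLS feed.
PLAUSIBLE = {
    "sqft": (300, 20_000),
    "bedrooms": (0, 15),
    "year_built": (1800, datetime.date.today().year),
}


def record_issues(record):
    """List completeness and plausibility problems in one listing record."""
    issues = []
    for field, (low, high) in PLAUSIBLE.items():
        value = record.get(field)
        if value is None:
            issues.append(f"missing {field}")
        elif not low <= value <= high:
            issues.append(f"implausible {field}={value}")
    return issues


def issue_rate_by_feed(records):
    """Share of records with at least one issue, grouped by the feed they came from."""
    totals, flagged = {}, {}
    for record in records:
        feed = record["feed"]
        totals[feed] = totals.get(feed, 0) + 1
        flagged[feed] = flagged.get(feed, 0) + (1 if record_issues(record) else 0)
    return {feed: flagged[feed] / totals[feed] for feed in totals}


# Invented sample records from two hypothetical regional feeds.
records = [
    {"feed": "region_a", "sqft": 2_100, "bedrooms": 3, "year_built": 1995},
    {"feed": "region_a", "sqft": 1_800, "bedrooms": 2, "year_built": 1988},
    {"feed": "region_b", "sqft": 21, "bedrooms": 3, "year_built": 2001},
    {"feed": "region_b", "sqft": 1_950, "bedrooms": None, "year_built": 1979},
]
print(issue_rate_by_feed(records))  # {'region_a': 0.0, 'region_b': 1.0}
```

Tracking a per-feed issue rate over time gives the business a concrete signal for which regional sources need cleaning before their data reaches a pricing model.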

In addition, broader market conditions such as interest rates, GDP, unemployment, and supply and demand can affect home prices. Say the unemployment rate is very high, so people aren't earning money and can't afford to pay their mortgages. We might then see many houses on the market because of an excess of supply and a shortage of demand. On the flip side, if unemployment goes down and the economy is doing well, we might see a housing market boom.

There will always be factors that affect a home's price but can't easily be measured and included in a model. The question here is really twofold: First, how should a company like Zillow collect data on factors that can affect prices, from a specific home's condition to the general state of the economy? And second, how should the business understand and account for data quality issues?

Data Drift

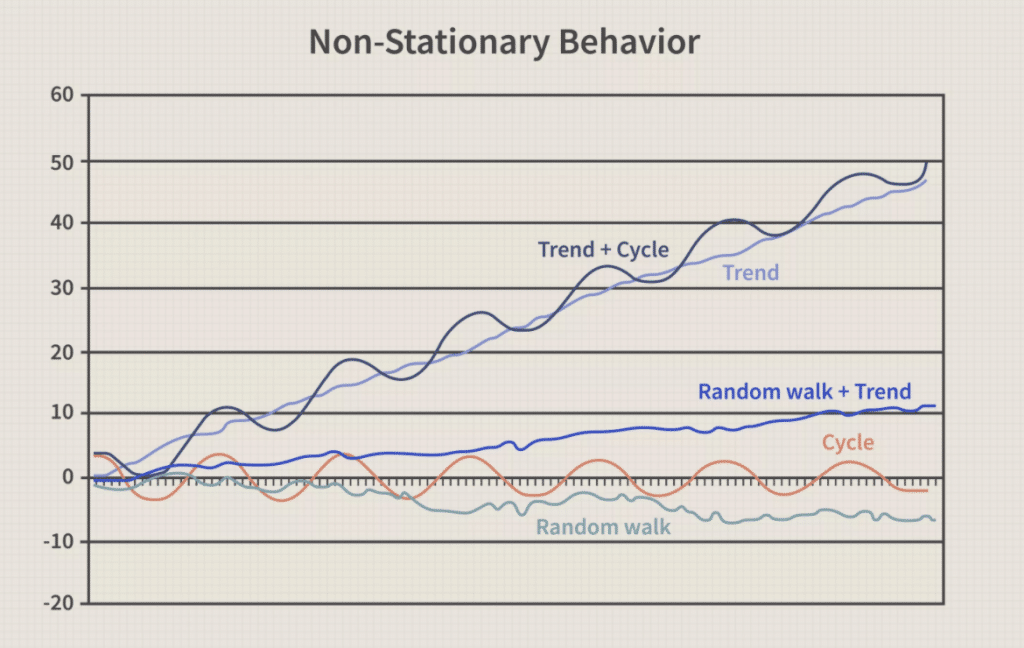

The world changes constantly. Although the price of a house may not vary much from day to day, prices do change over longer periods because of depreciation as well as market conditions. This is a challenge to model. While it's clear that prices over time follow a non-stationary pattern, it's also hard to gather much time series data on price fluctuations for a single home.

Types of non-stationary behavior. Home prices typically follow a trend + cycle pattern.

So teams often resort to cross-sectional data on house-specific variables such as square footage, year built, or location. Cross-sectional data consists of observations of many different subjects (here, houses) at a single point in time, with each observation belonging to a different subject. In Zillow's case, cross-sectional data would be the price of each of 1,000 randomly selected houses in San Francisco for the year 2020.

Cross-sectional data is, of course, a snapshot of historical data. This is where risk comes in, because implicit in the machine learning process of dataset construction, model training, and model evaluation is the assumption that the future will be the same as the past. This is known as the stationarity assumption: the idea that the processes or behaviors being modeled are stationary over time (i.e., they don't change). This assumption lets us readily use a variety of ML algorithms, from boosted trees and random forests to neural networks, to model scenarios, but it exposes us to potential problems down the road.
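To make the stationarity assumption concrete, here is a minimal sketch on synthetic data (an invented trend + cycle series, not real prices). A naive model that assumes nothing changes simply predicts the average of its training window; its error looks modest in-sample but grows once the trend carries prices away from that average.

```python
import numpy as np

# Synthetic monthly price series: upward trend + yearly cycle + noise (invented numbers).
rng = np.random.default_rng(0)
months = np.arange(120)
prices = (
    300_000
    + 1_500 * months
    + 20_000 * np.sin(2 * np.pi * months / 12)
    + rng.normal(0, 5_000, months.size)
)
train, future = prices[:60], prices[60:]

# A model that implicitly assumes stationarity: it always predicts the training average.
stationary_forecast = train.mean()


def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    return float(np.mean(np.abs((actual - predicted) / actual)) * 100)


in_sample_error = mape(train, stationary_forecast)
future_error = mape(future, stationary_forecast)
print(f"MAPE on training window: {in_sample_error:.1f}%")
print(f"MAPE on later window:    {future_error:.1f}%")
```

Real models are far more sophisticated than a constant forecast, but any model fit only to a historical snapshot inherits the same blind spot when the underlying process trends or cycles.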

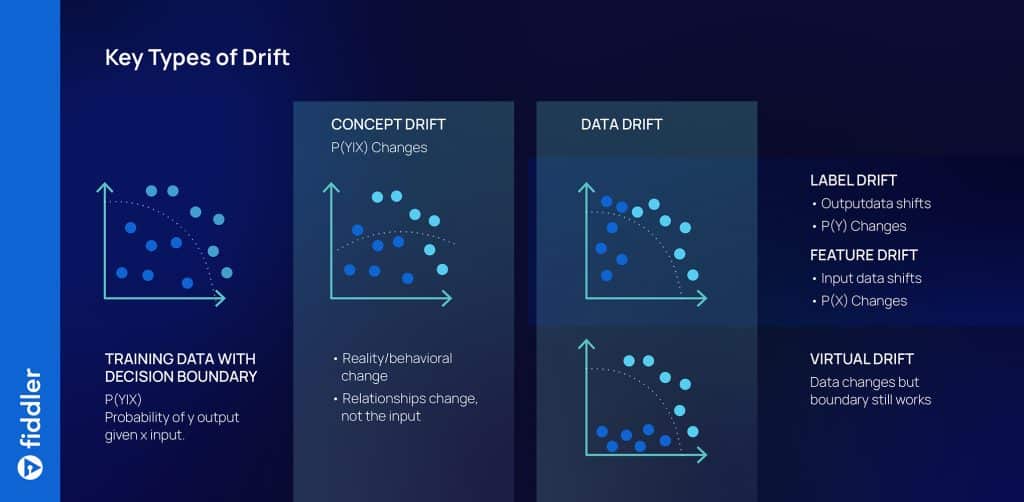

If (as is usually the case in the real world) the data is not stationary, the relationships the model learns will shift with time and become unreliable. Data scientists use the term data drift to describe how a process or behavior can change, or drift, as time passes. In effect, ML algorithms search the past for patterns that will generalize to the future. But the future is subject to constant change, and production models can lose accuracy over time because of data drift. There are three types of data drift we need to be aware of: concept drift, where the relationship between the inputs and the target changes (the same house features now command a different price); label drift, where the distribution of the target itself shifts (overall price levels rise or fall); and feature drift, where the distribution of the inputs shifts (the mix of homes being listed changes). One or more of these types of drift could have caused Zillow's models to deteriorate in production.

Types of data drift: concept drift, label drift, and feature drift
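Feature drift is the easiest of the three to check without waiting for sale prices. One common heuristic is the population stability index (PSI), which compares the binned distribution of a feature in production against its training distribution. The sketch below is an illustrative NumPy implementation, assuming decile bins taken from the training data; the square-footage samples are synthetic.

```python
import numpy as np


def population_stability_index(reference, current, bins=10):
    """PSI between a reference (training) sample and a current (production) sample."""
    reference = np.asarray(reference, dtype=float)
    current = np.asarray(current, dtype=float)
    # Bin edges come from quantiles of the reference data (deciles by default).
    edges = np.quantile(reference, np.linspace(0, 1, bins + 1))
    # Clip production values into the training range so outliers land in the outer bins.
    current = np.clip(current, edges[0], edges[-1])
    ref_frac = np.histogram(reference, bins=edges)[0] / reference.size
    cur_frac = np.histogram(current, bins=edges)[0] / current.size
    # A small floor avoids division by zero and log(0) for empty bins.
    ref_frac = np.clip(ref_frac, 1e-6, None)
    cur_frac = np.clip(cur_frac, 1e-6, None)
    return float(np.sum((cur_frac - ref_frac) * np.log(cur_frac / ref_frac)))


rng = np.random.default_rng(42)
train_sqft = rng.normal(2_100, 400, 5_000)    # training-time square footage (synthetic)
stable_sqft = rng.normal(2_100, 400, 5_000)   # production data, same distribution
shifted_sqft = rng.normal(2_500, 400, 5_000)  # production data after a shift

print(f"{population_stability_index(train_sqft, stable_sqft):.3f}")   # near 0
print(f"{population_stability_index(train_sqft, shifted_sqft):.3f}")  # much larger
```

A commonly quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, though thresholds should be tuned per feature. The same function can be applied to the model's predictions or to observed prices to watch for label drift; concept drift generally only becomes visible once ground-truth prices arrive and error metrics start to degrade.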

Model Performance Monitoring

Model performance monitoring is essential, especially because an operationalized ML model can drift and deteriorate in a short amount of time due to the non-stationary nature of the data. A number of MLOps monitoring tools provide basic to advanced model performance monitoring for ML teams today. While we don't know what kind of monitoring Zillow had configured for its models, most solutions offer some form of the following:

- Performance metric tracking: Metrics like MAPE (mean absolute percentage error) and MSE (mean squared error) can be tracked in a central place for all business stakeholders, with alerting set up so that if model performance crosses a threshold on any of these metrics, the team can take action immediately.

- Monitoring for data drift: By monitoring feature values, shifts in the feature distributions, and changes in feature importance, the team gets signals for when the model might need to be retrained. In Zillow's case, it would also have been a good idea to monitor changes in macroeconomic factors like the unemployment rate, GDP, and supply and demand across different geographic regions.
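A minimal sketch of threshold-based metric alerting, assuming predictions are later joined with realized sale prices in fixed monitoring windows. The 8% MAPE threshold and the prices are hypothetical; in practice the threshold would come from the business's tolerance for pricing error.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent."""
    return 100 * sum(abs((a - p) / a) for a, p in zip(actual, predicted)) / len(actual)


def mse(actual, predicted):
    """Mean squared error, in squared dollars."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical alert threshold; MSE is tracked but not alerted on here.
THRESHOLDS = {"mape": 8.0}


def check_window(actual, predicted, thresholds=THRESHOLDS):
    """Compute metrics for one monitoring window and list the metrics that crossed their threshold."""
    metrics = {"mape": mape(actual, predicted), "mse": mse(actual, predicted)}
    alerts = [name for name, limit in thresholds.items() if metrics[name] > limit]
    return metrics, alerts


sale_prices = [250_000, 310_000, 400_000]
_, early_alerts = check_window(sale_prices, [245_000, 320_000, 390_000])
_, later_alerts = check_window(sale_prices, [290_000, 360_000, 470_000])
print(early_alerts)  # []
print(later_alerts)  # ['mape']
```

In the second window the model systematically overpays by roughly 16%, which is the kind of pattern an alert should surface before many more purchases are made.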

Model Explainability

While we don't know much about Zillow's AI methodologies, the company seems to have made some investments in Explainable AI. Model explanations provide more information and intuition about how a model operates, and reduce the risk that it will be misused. Both commercial and open-source tools are available today to give teams a deeper understanding of their models. Here are some of the most common explainability techniques, along with examples of how they might have been applied in Zillow's case:

Local instance explanations: Given a single data instance, quantify each feature's contribution to the prediction.

- Example: Given a house and its predicted price of $250,000, how important was the year of construction vs. the number of bathrooms in contributing to its price?
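For a linear model, a local explanation can be computed exactly: each feature contributes its coefficient times how far the house's value sits from the training average. The sketch below fits ordinary least squares with NumPy on invented data; for non-linear models, tools such as SHAP or LIME approximate this kind of per-feature attribution instead.

```python
import numpy as np

FEATURES = ["year_built", "bathrooms", "sqft"]

# Invented training data; the price rule below exists only to generate examples.
rng = np.random.default_rng(1)
n = 500
X = np.column_stack([
    rng.integers(1950, 2020, n),
    rng.integers(1, 4, n),
    rng.normal(2_000, 350, n),
]).astype(float)
price = 1_000 * (X[:, 0] - 1950) + 15_000 * X[:, 1] + 100 * X[:, 2] + rng.normal(0, 10_000, n)

# Ordinary least squares with an intercept column.
coef, *_ = np.linalg.lstsq(np.column_stack([np.ones(n), X]), price, rcond=None)
intercept, weights = coef[0], coef[1:]
feature_means = X.mean(axis=0)


def explain(x):
    """Baseline (prediction at the feature means) plus each feature's contribution for house x."""
    baseline = intercept + weights @ feature_means
    contributions = weights * (x - feature_means)
    return baseline, contributions


house = np.array([1995.0, 2.0, 2_100.0])
baseline, contributions = explain(house)
prediction = intercept + weights @ house
for name, c in zip(FEATURES, contributions):
    print(f"{name:>10}: {c:+,.0f}")
print(f"baseline {baseline:,.0f} + contributions = prediction {prediction:,.0f}")
```

Because the contributions add back up to the prediction, an analyst can read off directly whether year built or bathroom count moved this house's price more.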

Instance explanation comparisons: Given a set of data instances, compare the factors that lead to their predictions.

- Example: Given five houses in a neighborhood, what distinguishes them and their prices?
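Comparing instances works the same way. For a linear model, the gap between two predictions splits exactly into per-feature terms. The weights below are hypothetical stand-ins for a fitted model, and the five houses are invented; each house is compared against the neighborhood average.

```python
import numpy as np

FEATURES = ["sqft", "bedrooms", "bathrooms", "year_built"]
# Hypothetical linear price model: invented weights, not a fitted model.
WEIGHTS = np.array([120.0, 8_000.0, 12_000.0, 900.0])
INTERCEPT = -1_700_000.0


def predict(x):
    return INTERCEPT + WEIGHTS @ x


def compare(house_a, house_b):
    """Split the predicted price gap between two houses into per-feature terms (exact for a linear model)."""
    gap = predict(house_a) - predict(house_b)
    per_feature = dict(zip(FEATURES, WEIGHTS * (house_a - house_b)))
    return gap, per_feature


neighborhood = np.array([
    [2_100, 3, 2, 1990],
    [2_100, 3, 2, 2015],
    [1_600, 2, 1, 1975],
    [2_800, 4, 3, 2005],
    [2_300, 3, 2, 1998],
], dtype=float)
reference = neighborhood.mean(axis=0)
for i, house in enumerate(neighborhood):
    gap, parts = compare(house, reference)
    top = max(parts, key=lambda k: abs(parts[k]))
    print(f"house {i}: {gap:+,.0f} vs. neighborhood average, driven mostly by {top}")
```

Houses 0 and 1 differ only in year built, so comparing them directly attributes their entire price gap to that one feature.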

Counterfactuals: Given a single data instance, ask "what if" questions to observe the effect that modified features have on its prediction.

- Example: Given a house and its predicted price of $250,000, how would the price change if it had an extra bedroom?

- Example: Given a house and its predicted price of $250,000, what would I have to change to increase its predicted price to $300,000?
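A counterfactual query only needs access to the model's prediction function. The sketch assumes a hypothetical toy valuation function, `predict_price`, standing in for a trained model. It answers the extra-bedroom question by re-scoring a modified copy of the house, and the $300,000 question with a simple search over a single feature.

```python
def predict_price(house):
    """Hypothetical toy valuation model standing in for any trained model's predict()."""
    return 25_000 + 100 * house["sqft"] + 10_000 * house["bedrooms"] + 7_500 * house["bathrooms"]


def what_if(house, **changes):
    """Change in predicted price when some features are overridden."""
    return predict_price({**house, **changes}) - predict_price(house)


def sqft_needed(house, target, step=50, max_steps=200):
    """Smallest square footage (in `step` increments) whose prediction reaches `target`, or None."""
    for k in range(max_steps + 1):
        candidate = {**house, "sqft": house["sqft"] + k * step}
        if predict_price(candidate) >= target:
            return candidate["sqft"]
    return None


house = {"sqft": 1_800, "bedrooms": 3, "bathrooms": 2}
print(predict_price(house))                            # 250000
print(what_if(house, bedrooms=house["bedrooms"] + 1))  # 10000
print(sqft_needed(house, 300_000))                     # 2300
```

Dedicated counterfactual methods search over several features at once and add constraints so that suggested changes are realistic; varying one feature is only the simplest version of the idea.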

Nearest neighbors: Given a single data instance, find data instances with similar features, predictions, or both.

- Example: Given a house and its predicted price of $250,000, what other houses have similar features, price, or both?

- Example: Given a house and a binary model prediction that says "buy," what is the most similar real home that the model predicts "don't buy"?
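A nearest-neighbor lookup can be sketched with standardized Euclidean distance, so that square footage does not swamp bedroom counts. The portfolio and the buy/don't-buy decisions below are invented. The second query returns the most similar house that received the opposite decision, which is exactly the kind of pair a reviewer would want to inspect.

```python
import numpy as np

# Hypothetical portfolio: [sqft, bedrooms, bathrooms, year_built].
houses = np.array([
    [1_800, 3, 2, 1995],
    [1_850, 3, 2, 1998],
    [2_600, 4, 3, 2010],
    [1_750, 3, 1, 1992],
    [1_200, 2, 1, 1960],
], dtype=float)
decisions = np.array([1, 0, 1, 1, 0])  # invented model output: 1 = "buy", 0 = "don't buy"

# Standardize each feature so that no single unit dominates the distance.
scaled = (houses - houses.mean(axis=0)) / houses.std(axis=0)


def nearest(query_idx, k=2, only_label=None):
    """Indices of the k houses closest to houses[query_idx], optionally restricted to one decision."""
    dist = np.linalg.norm(scaled - scaled[query_idx], axis=1)
    dist[query_idx] = np.inf  # exclude the query house itself
    if only_label is not None:
        dist[decisions != only_label] = np.inf
    return [int(i) for i in np.argsort(dist)[:k]]


print(nearest(0))                     # [1, 3]
print(nearest(0, k=1, only_label=0))  # [1]: nearly identical house, opposite decision
```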

Regions of error: Given a model, locate regions where its prediction uncertainty is high.

- Example: Given a house price prediction model trained mostly on older homes priced from $100,000 to $300,000, can I trust its prediction that a newly built house costs $400,000?
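A very simple proxy for a region of error is extrapolation: flag predictions whose inputs or outputs fall outside the range the model was trained on. The sketch below hard-codes a hypothetical training summary matching the example (older homes priced from $100,000 to $300,000). More thorough approaches, such as measuring disagreement across an ensemble or fitting prediction intervals, estimate uncertainty directly.

```python
# Hypothetical summary of the training data: older homes priced $100,000 to $300,000.
TRAIN_YEAR_BUILT = (1955, 1999)
TRAIN_PRICE = (100_000, 300_000)


def extrapolation_flags(year_built, predicted_price):
    """Reasons a prediction falls outside what the model saw during training."""
    flags = []
    if not TRAIN_YEAR_BUILT[0] <= year_built <= TRAIN_YEAR_BUILT[1]:
        flags.append("year_built outside training range")
    if not TRAIN_PRICE[0] <= predicted_price <= TRAIN_PRICE[1]:
        flags.append("predicted price outside training range")
    return flags


print(extrapolation_flags(1980, 220_000))  # []
print(extrapolation_flags(2021, 400_000))  # both flags: route to a human reviewer
```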

Feature importance: Given a model, rank the features that are most influential to its overall predictions.

- Example: Given a house price prediction model, does it make sense that the top three most influential features are square footage, year built, and location?
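Permutation importance is one model-agnostic way to produce such a ranking: shuffle one feature at a time and measure how much the error grows. The NumPy sketch below uses synthetic data with a deliberately irrelevant `paint_color_code` feature, which should land at the bottom of the ranking. scikit-learn offers a maintained version as `sklearn.inspection.permutation_importance`.

```python
import numpy as np

rng = np.random.default_rng(7)
n = 1_000
FEATURES = ["sqft", "year_built", "location_score", "paint_color_code"]
X = np.column_stack([
    rng.normal(2_000, 400, n),
    rng.integers(1950, 2020, n),
    rng.uniform(0, 10, n),
    rng.integers(0, 5, n),  # deliberately unrelated to price
]).astype(float)
y = 100 * X[:, 0] + 800 * (X[:, 1] - 1950) + 15_000 * X[:, 2] + rng.normal(0, 10_000, n)

# Ordinary least squares with an intercept column.
coef, *_ = np.linalg.lstsq(np.column_stack([np.ones(n), X]), y, rcond=None)


def predict(features):
    return coef[0] + features @ coef[1:]


def mse(y_true, y_pred):
    return float(np.mean((y_true - y_pred) ** 2))


def permutation_importance(X, y, n_repeats=5):
    """Average increase in MSE when each feature is shuffled, sorted from most to least important."""
    baseline = mse(y, predict(X))
    scores = {}
    for j, name in enumerate(FEATURES):
        increases = []
        for _ in range(n_repeats):
            shuffled = X.copy()
            shuffled[:, j] = rng.permutation(shuffled[:, j])  # break the feature/target link
            increases.append(mse(y, predict(shuffled)) - baseline)
        scores[name] = float(np.mean(increases))
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))


scores = permutation_importance(X, y)
for name, score in scores.items():
    print(f"{name:>16}: {score:,.0f}")
```

For brevity the importances are computed on the training data; on a real project they should be computed on a held-out set.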

For a high-stakes use case like Zillow's, where a model's decisions can have a huge impact on the business, it's important to have a human-in-the-loop ML workflow in which explainability enables investigations and corrections.

Conclusion

AI can help grow and scale businesses and generate incredible results. But if we don't manage risks properly, or markets turn against the assumptions we have made, outcomes can go from bad to catastrophic.

We really don't know Zillow's methodology or how it managed its model risk. It's possible that the team's best intentions were overridden by aggressive management. And it's certainly possible that there were broader operational issues, such as the processes and labor needed to flip homes quickly and efficiently. But one thing is clear: it's imperative that organizations operating in capital-intensive areas establish a strong risk culture around how their models are developed, deployed, and operated.

In this area, other industries have much to learn from banking, where SR 11-7 provides guidance on model risk management. We've covered four key areas that factor into model risk management: data collection and quality, data drift, model performance monitoring, and explainability.

Robust model risk management is important for every company operationalizing AI in its critical business workflows. ML models are extremely hard to build and operate, and much depends on our assumptions and how we define the problem. MRM as a process can help reduce the risks and uncertainties along the way.