We saw AI in the spotlight a lot in 2021, but not always for the right reasons. One of the most pivotal moments for the industry was the revelation of the “Facebook Files” in October. Former Facebook product manager and whistleblower Frances Haugen testified before a Senate subcommittee on ways Facebook algorithms “amplified misinformation” and how the company “consistently chose to maximize growth rather than implement safeguards on its platforms.” (Source: NPR). It was an awakening for everyone, from laypeople unaware of the ways they interacted with AI and triggered algorithms to skilled engineers building innovative, AI-powered products and features. We, the AI industry collectively, have to and can do better.

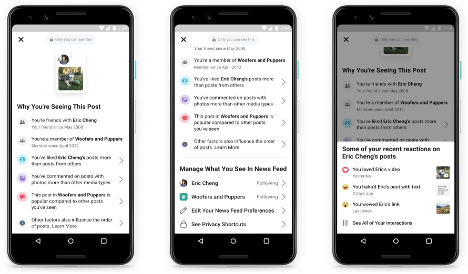

Why am I seeing this? (source: Facebook)

As a former engineer on the News Feed team at Facebook, one who worked on the company’s “Why am I seeing this?” application, which tries to explain to users the logic behind post rankings, I was saddened by Haugen’s testimony exposing, among other things, Facebook’s algorithmic opaqueness. After the 2016 elections, my team at Facebook started working on putting guardrails around News Feed AI algorithms, checking for data integrity, and building debugging and diagnostic tooling to understand and explain how they work.

Features like “Why am I seeing this?” started to bring much-needed AI transparency to the News Feed for both internal and external users. I began to see that problems like these weren’t intractable, but in fact solvable. I also began to understand that they weren’t just a “Facebook problem,” but were prevalent across the enterprise.

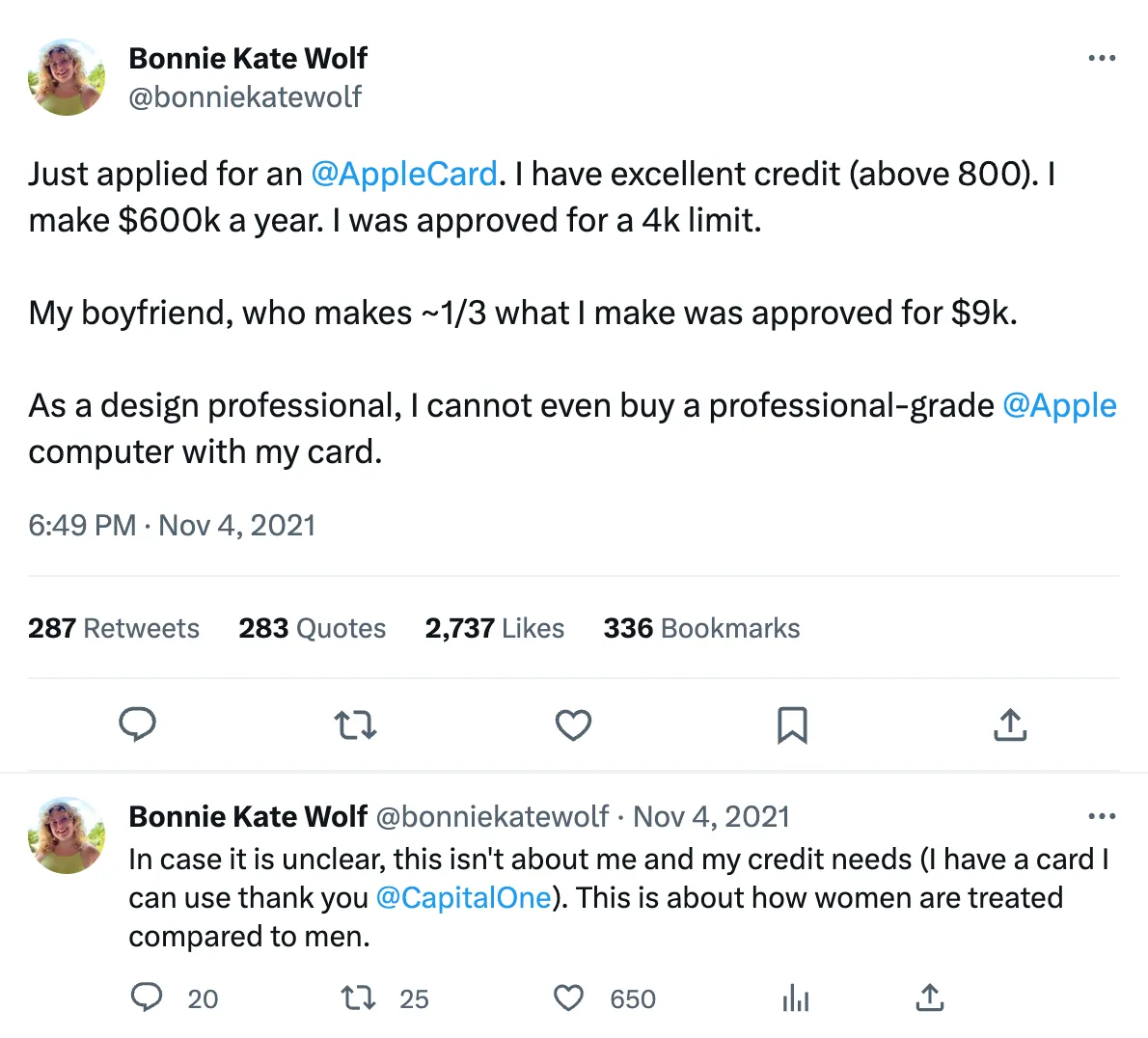

Users complaining on Twitter about Apple Card’s gender bias (source: Twitter)

Two years ago, for example, Apple and Goldman Sachs were accused of credit-card bias. What started as a tweet thread with multiple reports of alleged bias (including from Apple’s very own co-founder, Steve Wozniak, and his spouse) eventually led to a regulatory probe into Goldman Sachs and their AI prediction practices.

As laid out in the tweet thread, Apple Card’s customer service reps were rendered powerless to the AI’s decision. Not only did they have no insight into why certain decisions were made, they were also unable to override them. And yet it’s still happening.

Where Do We Go from Here?

Algorithmic opacity and bias aren’t just a “tech company” problem; they’re an equal opportunity threat. Today, AI is being used everywhere from credit underwriting and fraud detection to clinical diagnosis and recruiting. Wherever a human has been tasked with making decisions, AI is either now assisting in those decisions or has taken them over.

Humans, however, don’t want a future ruled by unregulated, capricious, and potentially biased AI. Civic society needs to wake up and hold large corporations accountable. Great work is being done in raising awareness by people like Joy Buolamwini, who started the Algorithmic Justice League in 2016, and Jon Iwata, founding executive director of the Data & Trust Alliance. To ensure every company follows the path of TikTok and discloses their algorithms to regulators, we need strict laws. Congress has been sitting on the Algorithmic Accountability Act since June 2019. It’s time to act quickly and pass the bill in 2022.

Yet even as we speak, new AI systems are being set up to make decisions that dramatically impact humans, and that warrants a much closer look. People have a right to know how decisions affecting their lives were made, and that means explaining AI.

But the reality is, that’s getting harder and harder to do. “Why am I seeing this?” was a good faith attempt at doing so (I know, I was there), but I can also say that the AI at Facebook has grown enormously complex, becoming even more of a black box.

The problem we face today as global citizens is that we don’t know what data the AI models are being built on, and these models are the ones our banks, doctors, and employers are using to make decisions about us. Worse, most companies using these models don’t know themselves.

We have to hit the pause button. So much depends on us getting this right. And by “this,” I mean AI and its use in decisions that impact humans.

This is also why I founded a company dedicated to making AI transparent and explainable. In order for humans to build trust with AI, it needs to be “transparent.” Companies need to understand how their AI works and be able to explain its workings to all stakeholders.

They also need to know what data their systems were, and are, being trained on, because if that data is incomplete or biased (whether inadvertently or intentionally), the flawed decisions they make will be reinforced perpetually. And then it’s really the companies, leaders, and all of us that are being unethical.

Getting It Right

So what’s the fix? How can we ensure the present, future, and ongoing upward trajectory of AI is ethical and that AI is, as much as possible, always used for good? There are three steps to getting this right:

- Making AI “explainable” is a critical first step, which in turn creates a need for people, software, and services that can 1) deconstruct current AI applications and identify their data and algorithmic roots and taxonomies and 2) ensure the recipes of new AI applications are 100% transparent. For this to work, of course, companies need to be well-intentioned. Explainable AI only results in Ethical AI if companies want it to. That said, companies should not view Ethical AI as a cost to their business. Good ethics is good for business.

- Regulating AI is not only a logical next step, I’d argue it’s an urgent one. The potential for AI to be abused is so great, and the ramifications of that abuse so morally, socially, and economically significant, that we can’t just rely on self-policing. AI needs a regulatory body analogous to the one imposed on the financial services industry after the 2008 financial crisis (remember “too big to fail”?). With AI, the stakes are just as high, or higher, and we need a watchdog, one with teeth, to actively guide and monitor future AI deployments. AI regulations could provide a common operating framework, set up a level playing field, and hold companies accountable, a boon for all stakeholders.

- A framework like the one used for Environmental, Social, Governance (ESG) reporting would be a huge value add. Today, companies around the world are being held accountable by shareholders, supply chain partners, boards, and customers to decarbonize their operations to help meet climate goals. ESG reporting makes corporate sustainability efforts more transparent to stakeholders and regulators. AI would benefit from a similar framework.

Until AI is “explainable,” it will be impossible to ensure it’s “ethical.” My hope is that we learn from years of inaction on climate change that getting this right, now, is critical to the current and future wellbeing of humankind.

This is solvable. We know what we need to do and have models we can follow. Fiddler and others are providing practical tools, and governing bodies are taking notice. We can no longer abdicate responsibility for the things we create. Together, we can make 2022 a milestone year for AI, implementing regulation that provides transparency and ultimately restores trust in the technology.