I had the pleasure of sitting down with Patrick Hall from BNH.AI, where he advises clients on AI risk and researches model risk management for NIST's AI Risk Management Framework. He also teaches data ethics and machine learning at The George Washington University School of Business. Patrick co-founded BNH and led H2O.ai's efforts in responsible AI. I had a ton of questions for Patrick, and he had a few spicy takes. If you don't have time to listen to the full interview (I'm biased, but I recommend that you do), here are a few takeaways from our discussion.

TAKEAWAY 1

To make AI systems accountable, start with the NIST AI Risk Management Framework, give end users explanations and a path of recourse to appeal wrong decisions, and establish model governance structures that are separate from the technology side of the organization.

Patrick recommends referring to the NIST AI Risk Management Framework, which was released in January 2023. Many responsible AI or trustworthy AI checklists are available online, but the most reliable ones tend to come from organizations like NIST and ISO (the International Organization for Standardization). NIST and ISO develop different standards, but they work together to keep them aligned.

ISO provides extensive checklists for validating machine learning models and ensuring neural network reliability, while NIST focuses more on research and synthesizes that research into guidance. NIST recently published a comprehensive AI risk management framework, version 1.0, which is one of the best resources available anywhere in the world.

Now, when it comes to accountability, there are several key elements. One direct approach involves explainable AI, which enables actionable recourse. By explaining how a decision was made and offering a process for appealing that decision, AI systems can become more accountable. In my opinion, this is the most important aspect of AI accountability.

Another aspect involves internal governance structures, like appointing a chief model risk officer who is solely responsible for AI risk and model governance. This person should be well compensated, have a large staff and budget, and be independent of the technology organization. Ideally, they would report to the board risk committee and be hired and fired by the board, not by the CEO or CTO.

TAKEAWAY 2

Individuals like Elon Musk and leading AI companies may sign letters warning about AGI for strategic reasons. These conversations distract from the real and addressable problems with AI.

Patrick shared his opinion that Tesla wants the government and consumers to be confused about the current state of self-driving AI, which is not as capable as it is often portrayed. OpenAI wants its competitors to slow down, since many of them have more funding and personnel. He believes this is the primary reason these companies sign such letters.

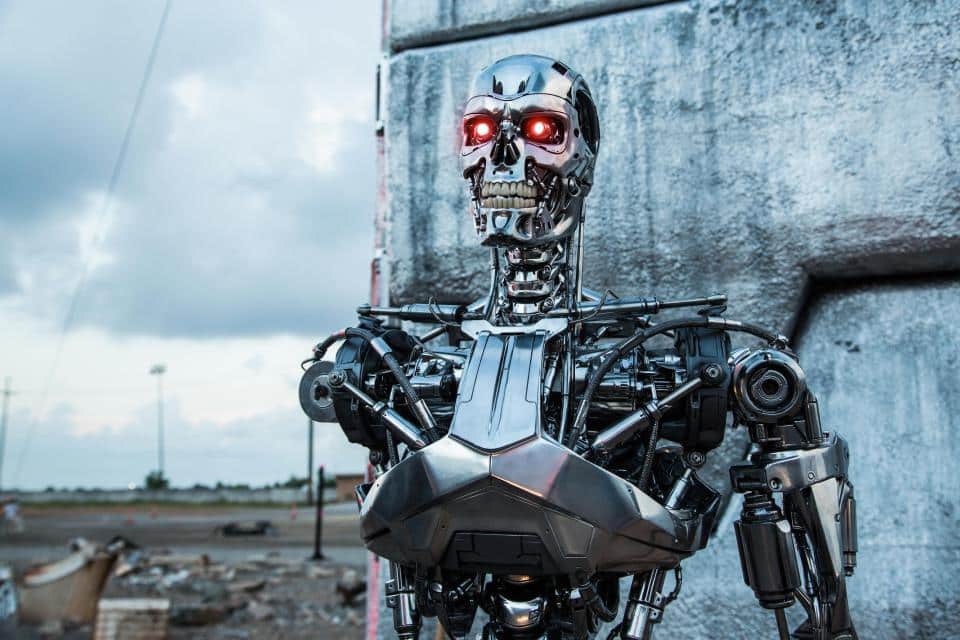

These discussions create noise and divert AI risk management resources toward imagined future catastrophic risks, like the Terminator scenario. Patrick considers such concerns fake and, in the worst cases, deceitful. He emphasized that we are nowhere near a Skynet or Terminator scenario, and that the intentions of those who advocate shifting resources in that direction should be questioned.

Paramount Pictures


TAKEAWAY 3

Using hard evidence, like the incidents recorded in the AI Incident Database, to encourage the adoption of responsible AI practices works better than appealing to abstract concepts like "fairness."

Large organizations and individuals often struggle with concepts like AI fairness, privacy, and transparency, because everyone has different expectations about what these concepts mean. To sidestep these difficult debates, incidents (indisputable, negative events that cost money or harm people) can be a useful focus for promoting responsible AI adoption.

The AI Incident Database is an interactive, searchable index containing thousands of public reports on more than 500 known AI and machine learning system failures. The database serves two main purposes: sharing information to prevent repeat incidents, and raising public awareness of these failures, which may discourage high-risk AI deployments.

A prime example of mishandling AI risk is the chatbot launched in South Korea that, in early 2021, started making derogatory comments about various groups of people and had to be shut down. This incident closely mirrored Microsoft's high-profile Tay chatbot failure. The repetition of such well-known AI incidents shows how immature AI system design still is. The AI Incident Database aims to share information about these failed designs so that organizations don't repeat past mistakes.

Patrick had many more takeaways and tidbits to help you manage your AI risk and prepare for upcoming AI regulations. To learn more, watch the entire webinar on demand!