A lot has been written about the importance of addressing risks in AI, whether that means avoiding high-profile snafus or keeping models stable through sudden changes in the data. For solutions to these issues, the financial services industry is one to watch. It pioneered the idea of Model Risk Management (MRM) more than 15 years ago. But models have changed drastically since then, and the needs and solutions have changed as well. At the Ai4 2021 Finance Summit, Fiddler Founder & CEO Krishna Gade chatted with Sri Krishnamurthy, Founder of QuantUniversity, to learn more about how financial institutions are tackling the problems with black box ML. Watch the full conversation here.

From Model Risk Management to Responsible AI

“Managing models in the financial industry is not something new,” Sri said. As he explained, the financial industry led the way in establishing a process for evaluating the implications of models. In the past, with stochastic models, the basic idea was to sample the population and make assumptions that could be applied more broadly. These relatively linear, simplistic assumptions could be explained clearly and fully described in a tool like MATLAB.

However, machine learning has introduced entirely new methodologies. AI models are much more complex. They’re high-dimensional and constantly adapt to the environment. How do you explain to the end-user what the model is doing behind the scenes? Responsible AI is about being able to perform this contextualization, and also putting a well-defined framework behind the adoption and integration of these newer technologies.

Responsible AI essentially goes hand in hand with fiduciary responsibility. Decisions made by models can affect individual assets and even society as a whole. “If you are a company which gives millions and millions of credit cards to people, and you have built a model which has either an explicit or an implicit bias in it, there are systemic effects,” Sri said. That’s why it’s so important for the financial industry to really understand models and have the right practices in place, rather than just rushing to adopt the latest AutoML or deep neural network technologies.

The challenges of validating ML models

When we asked Sri about the challenges of validating models, a key factor was the variation in how teams are adopting ML across the industry. For example, one organization might have 15 PhDs developing models internally, while another might have an MRM team of 1-2 people, who are probably not experts in ML, using machine learning as a service, calling an API, and consuming the output. Or they might be integrating an open source model that they didn’t build themselves.

Teams who are developing models internally are better prepared to understand how the models work, but traditional MRM teams may not be as well-equipped. Their goals are twofold: to verify that the model is robust, and to validate that the model is used in the right scenarios and can handle any potential challenges from an end-user. With black box models, “you’re kind of flying in the dark,” Krishnamurthy said. Tools for Explainable AI are an important piece of the puzzle.

Human error can complicate model validation as well. “Models are not necessarily automated; there are always humans in the loop,” Sri explained. This can lead to the so-called “fat finger effect,” where an analyst makes a mistake in the model input: instead of 0.01, they enter 0.1. With MRM teams demanding a seat at the table to address these kinds of problems, the model validation field itself is evolving.
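To make the fat finger example concrete, here is a minimal sketch of one way such a slip could be caught before it reaches a model. It is our own illustration, not something described in the conversation: the function name `flag_fat_finger`, the `max_ratio` threshold of 5, and the sample history are all assumptions, and a real MRM process would likely use richer checks.

```python
from statistics import median


def flag_fat_finger(value, history, max_ratio=5.0):
    """Return True if `value` is far from the median of past inputs.

    Hypothetical helper: compares magnitudes only, so a sign error
    would need a separate check.
    """
    typical = median(history)
    if typical == 0 or value == 0:
        # The ratio is undefined here; flag only if the two disagree.
        return value != typical
    ratio = abs(value) / abs(typical)
    # Flag values that are too large or too small relative to history.
    return ratio >= max_ratio or ratio <= 1.0 / max_ratio


# Illustrative history of an input that normally sits around 0.01.
past_rates = [0.010, 0.012, 0.009, 0.011]

print(flag_fat_finger(0.1, past_rates))   # analyst typed 0.1 instead of 0.01
print(flag_fat_finger(0.01, past_rates))  # the intended value
```

A ratio test is used here rather than a fixed range because a misplaced decimal point shifts a value by a factor of ten, which is easy to spot against the input's own history.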

Model lifecycle management

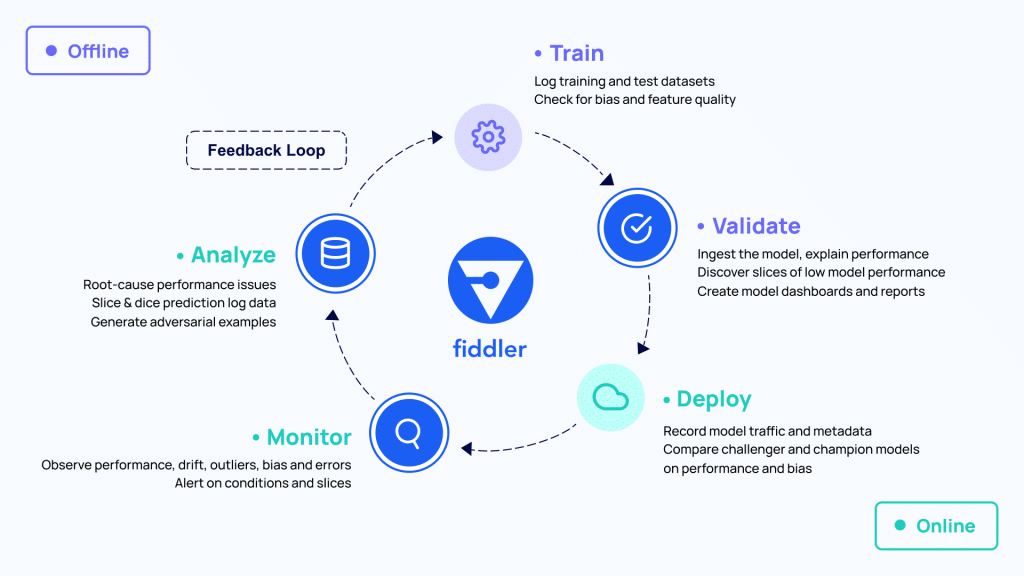

Bad input data, whether it’s caused by a human or an automated process, is just one thing that organizations need to be prepared for when implementing AI. Sri identified four main areas of focus: (1) the code, (2) the data, (3) the environment where the model runs (on-prem, in the cloud, etc.), and (4) the process of deploying, monitoring, and iterating on the model. Sri summed it up by saying, “It’s not model management anymore; it’s model lifecycle management.”
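As a rough illustration of what tracking those four areas together might look like, the sketch below keeps them in a single record. Everything in it is hypothetical: `ModelLifecycleRecord`, `fingerprint`, and the field names are our own placeholders rather than anything Sri described or any particular product's API.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime, timezone


def fingerprint(rows):
    """Hash the training rows so a later change in the data is detectable."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(repr(row).encode("utf-8"))
    return digest.hexdigest()


@dataclass
class ModelLifecycleRecord:
    model_name: str
    code_version: str      # (1) the code, e.g. a version-control commit id
    data_fingerprint: str  # (2) the data the model was trained on
    environment: str       # (3) where the model runs (on-prem, cloud, ...)
    events: list = field(default_factory=list)  # (4) the process over time

    def log_event(self, stage, note):
        """Append a timestamped deployment, monitoring, or iteration event."""
        self.events.append({
            "stage": stage,
            "note": note,
            "at": datetime.now(timezone.utc).isoformat(),
        })


# Illustrative values only.
record = ModelLifecycleRecord(
    model_name="credit-limit-model",
    code_version="abc1234",
    data_fingerprint=fingerprint([(0.01, 1), (0.02, 0)]),
    environment="on-prem",
)
record.log_event("deploy", "first production release")
record.log_event("monitor", "weekly drift check passed")
```

Keeping all four areas in one record means a reviewer can see which code, data, and environment produced a given deployment without piecing it together from separate systems.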

At Fiddler, we’ve come to the same realization. “We started with AI explainability to unlock the black box,” said Krishna, “but then we wondered: at what point should we explain it? Before training? Post-production deployment?” We had to step back and consider the entire lifecycle, from the data to validating and comparing models, and on to deployment and monitoring. What’s needed in the ML toolkit is a dashboard that sits at the center of the ML lifecycle and watches throughout, tracking all the metadata. From working in the industry, we’ve seen how common silos are: the data science team builds a model, ships it over to risk management, and then a separate MLOps team may be responsible for productionization. A consistent, shared dashboard can bring teams together by giving everyone insight into the model.

As Sri explained, when teams are just “scratching the surface and trying to understand what ML is,” it can be quite a practical challenge to also implement AI responsibly. Doing everything in-house probably isn’t realistic for a lot of teams. Consultants and external validators can help, and having the right tools available will go a long way towards making Responsible AI accessible, not just for finance, but for every industry.