Bias in AI is an issue that has really come to the forefront in recent months. Our recent blog post discussed the alleged bias issue with the Apple Card/Goldman Sachs. And this isn’t an isolated instance: racial bias in healthcare algorithms and bias in AI for judicial decisions are just a few more examples of rampant and hidden bias in AI algorithms.

While AI has had dramatic successes in recent years, Fiddler Labs was started to address a critical issue: explainability in AI. Complex AI algorithms today are black boxes; while they can work well, their inner workings are unknown and unexplainable, which is why we have situations like the Apple Card/Goldman Sachs controversy. While gender or race might not be explicitly encoded in these algorithms, there are subtle and deep biases that can creep into the data that is fed into them. It doesn’t matter if the input factors are not directly biased themselves: bias can be, and is being, inferred by AI algorithms.

Companies have no proof to show that the model is, in fact, not biased. On the other hand, there is substantial evidence in favor of bias, based on some of the examples we’ve seen from customers. Complex AI algorithms are invariably black boxes, and if AI solutions are not designed in such a way that there is a foundational fix, then we’ll continue to see more such cases. Consider the biased healthcare algorithm example above. Even with the intentional exclusion of race, the algorithm was still behaving in a biased way, possibly because of inferred characteristics.
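To make the idea of bias inferred from seemingly neutral inputs concrete, here is a minimal sketch on synthetic data. Everything in it is an assumption made for illustration: the groups "A" and "B", the `proxy` feature, the 0.5 threshold, and the toy decision rule. It does not reproduce any real system discussed in this post. The rule never sees the protected attribute, yet approval rates differ sharply by group because the one feature it does see is correlated with group membership.

```python
import random

def make_applicants(n=2000, seed=0):
    """Generate synthetic applicants (illustrative data only)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        # Protected attribute: recorded for auditing, never shown to the model.
        group = rng.choice(["A", "B"])
        # Hypothetical proxy feature whose distribution depends on the group.
        proxy = rng.gauss(0.7 if group == "A" else 0.3, 0.1)
        rows.append({"group": group, "proxy": proxy})
    return rows

def model_approves(row, threshold=0.5):
    """Toy decision rule that only looks at the proxy feature."""
    return row["proxy"] >= threshold

def approval_rate_by_group(rows):
    """Audit step: compare outcomes across the protected attribute."""
    totals, approved = {}, {}
    for row in rows:
        g = row["group"]
        totals[g] = totals.get(g, 0) + 1
        approved[g] = approved.get(g, 0) + int(model_approves(row))
    return {g: approved[g] / totals[g] for g in totals}

rates = approval_rate_by_group(make_applicants())
# Ratio of the lowest to the highest approval rate across groups.
disparate_impact = min(rates.values()) / max(rates.values())
print(rates, disparate_impact)
```

A ratio well below 0.8 (a common rule of thumb known as the four-fifths rule) would flag this rule for review, even though the protected attribute was excluded from its inputs.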

Prevention is better than cure

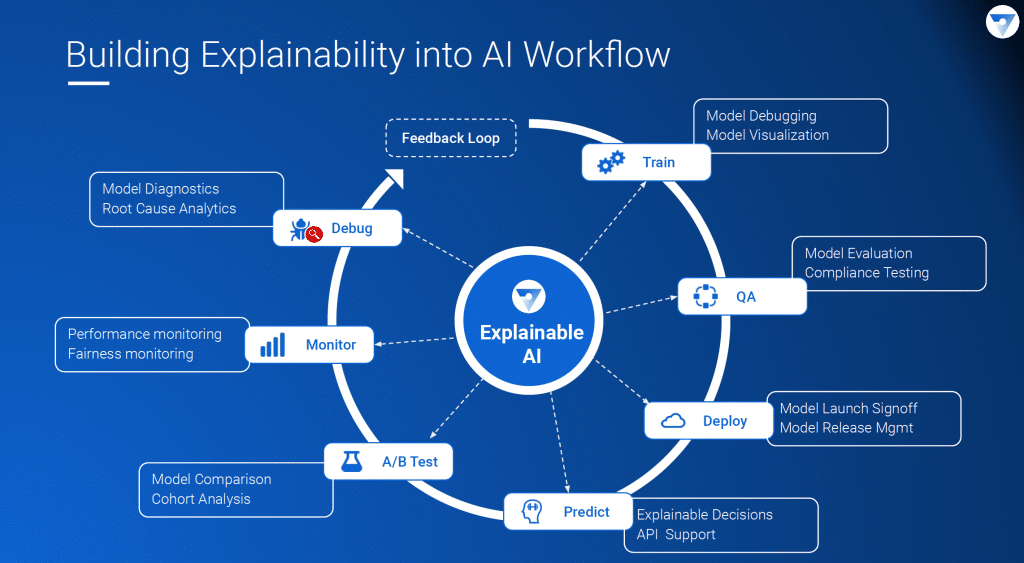

One of the main problems with AI today is that issues are detected after the fact, usually when people have already been impacted by them. This needs to change: explainability needs to be a fundamental part of any AI solution, right from design all the way to production, not just part of a post-mortem analysis. To prevent bias, we need visibility into the inner workings of AI algorithms, as well as the data, throughout the AI lifecycle. And we need humans in the loop monitoring these explainability results and overriding algorithm decisions where necessary.

What is explainable AI and how can it help?

Explainable AI is one of the best ways to understand the why behind your AI. It tells you why a certain prediction was made and provides correlations between inputs and outputs. From the training data used, through model validation, to testing and production, explainability plays a critical role.
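As a rough illustration of what "why a certain prediction was made" can look like, the sketch below attributes a linear model's score to its inputs by comparing an applicant against a baseline. The feature names, weights, and baseline are hypothetical, and this is one simple attribution scheme that is exact only for linear models. It is not a description of Fiddler's engine or of Google Cloud's Explainable AI.

```python
def predict(weights, bias, x):
    """Score of a linear model: bias + sum of weight * feature value."""
    return bias + sum(weights[name] * x[name] for name in weights)

def explain(weights, x, baseline):
    """Per-feature contribution relative to a baseline input.

    For a linear model the contributions add up exactly to
    predict(x) - predict(baseline).
    """
    return {name: weights[name] * (x[name] - baseline[name]) for name in weights}

# Hypothetical model and inputs, chosen only for illustration.
weights = {"income": 0.5, "debt": -0.8, "account_age": 0.1}
bias = 0.2
baseline = {"income": 1.0, "debt": 1.0, "account_age": 1.0}
applicant = {"income": 1.5, "debt": 2.0, "account_age": 3.0}

contributions = explain(weights, applicant, baseline)
delta = predict(weights, bias, applicant) - predict(weights, bias, baseline)

# List features from most negative to most positive contribution.
for name, value in sorted(contributions.items(), key=lambda kv: kv[1]):
    print(f"{name}: {value:+.2f}")
print(f"change in score vs. baseline: {delta:+.2f}")
```

Here the explanation shows that the higher debt pulls the score down by more than income and account age push it up. Non-linear models need more involved attribution methods, but the goal is the same: tie the output back to the inputs.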

Explainability is critical to AI success

With the recent launch of Google Cloud’s Explainable AI, the conversation around Explainable AI has accelerated. Google’s launch completely debunks a claim we’ve heard a lot recently: that Explainable AI is two years out. It demonstrates how companies need to move fast and adopt Explainability as part of their machine learning workflows, immediately.

But it raises the question: who should be doing the explaining?

What do businesses need in order to trust the predictions? First, we need explanations so we understand what’s going on behind the scenes. Then we need to know for a fact that these explanations are accurate and trustworthy, and come from a reliable source.

At Fiddler, we believe there needs to be a separation between church and state. If Google builds AI algorithms and also explains them for customers, without third-party involvement, the incentives are not aligned for customers to completely trust their AI models. This is why impartiality and independent third parties are crucial, as they provide that all-important independent opinion on algorithm-generated outcomes. It’s a catch-22 for any company in the business of building AI models. This is why third-party AI Governance and Explainability services are not just nice-to-haves, but crucial for AI’s evolution and use moving forward.

Google’s change in stance on Explainability

Google has changed their stance considerably on Explainability. They also went back and forth on AI ethics, starting an ethics board only to dissolve it in less than a fortnight. A few top executives at Google over the last couple of years went on record saying that they don’t necessarily believe in explaining AI. For example, here they mention that ‘..we might end up being in the same place with machine learning where we train one system to get an answer and then we train another system to say – given the input of this first system, now it’s your job to generate an explanation.’ And here they say that ‘Explainable AI won’t deliver. It can’t deliver the protection you’re hoping for. Instead, it provides a good source of incomplete inspiration’.

One might wonder about Google’s sudden launch of an Explainable AI service. Perhaps they got feedback from customers or they see this as an emerging market. Whatever the reason, it’s great that they did in fact change their minds and believe in the power of Explainable AI. We’re all for it.

Our belief at Fiddler Labs

We started Fiddler with the belief that Explainability should be built into the core of the AI workflow. We believe in a future where Explanations not only provide much-needed answers for businesses on how their AI algorithms work, but also help ensure they are launching ethical and fair algorithms for their users. Our work with customers is bearing fruit as we go along this journey.

Finally, we believe that Ethics plays a big role in explainability because ultimately the goal of explainability is to ensure that companies are building ethical and responsible AI. For explainability to reach its ultimate goal of ethical AI, we need an agnostic and independent approach, and this is what we’re working on at Fiddler.

Founded in October 2018, Fiddler’s mission is to enable businesses of all sizes to unlock the AI black box and deliver trustworthy AI experiences for their customers. Fiddler’s next-generation Explainable AI Engine enables data science, product and business users to understand, analyze, validate, and manage their AI solutions, providing transparent and reliable experiences to their end users. Our customers include pioneering Fortune 500 companies as well as emerging tech companies. For more information please visit www.fiddler.ai or follow us on Twitter @fiddlerlabs.