“On artificial intelligence, trust is a must, not a nice to have. With these landmark rules, the EU is spearheading the development of new global norms to make sure AI can be trusted.” – Margrethe Vestager, European Commission

Fiddler was founded with the mission to bring trust to AI. Given the frequency of news stories covering cases of algorithmic bias, the need for trustworthy AI has never been more important. The EU has been leading technology regulation globally since the historic introduction of GDPR, which paved the way for privacy regulation. With AI’s adoption reaching critical mass and its risks becoming apparent, the EU has proposed what would be the first comprehensive regulation to govern AI. The proposal provides insight into how the EU is looking to regulate the use and development of AI to ensure the trust of EU citizens. This post summarizes the key points in the regulation and focuses on how teams should think about its applicability to their machine learning (ML) practices.

(A full copy of the proposal can be found here.)

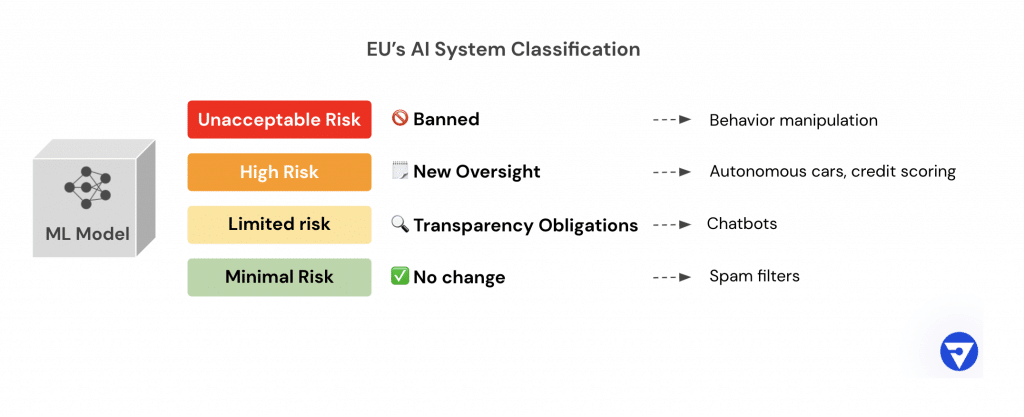

With the introduction of this “GDPR of AI,” the EU is looking to encourage and enforce the development of human-centric, inclusive, and trustworthy AI. The policy recommends using a risk-based approach to classify AI applications:

- Unacceptable risk applications like behavior manipulation are banned

- High risk applications like autonomous driving and credit scoring will have new oversight

- Limited risk applications like chatbots have transparency obligations

- Minimal risk applications like spam filters, which form the majority of AI applications, have no proposed interventions

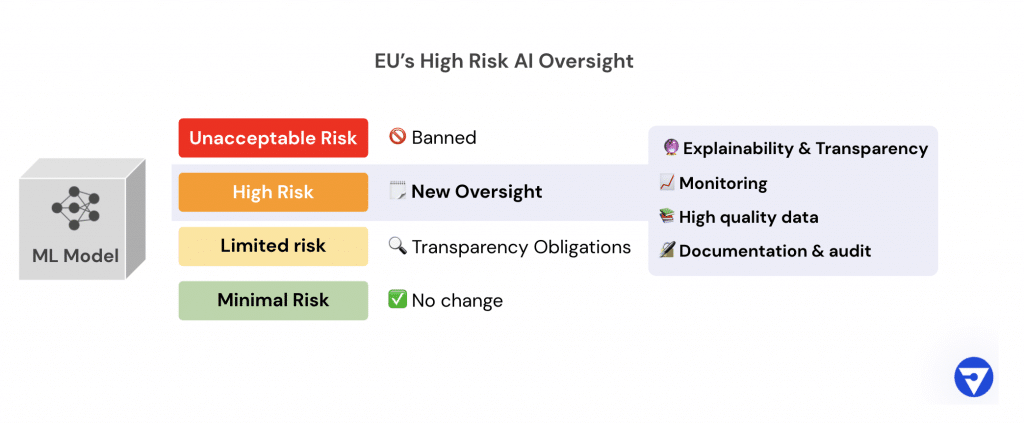

Let’s focus on the proposed guidelines for high risk applications. The regulation classifies high risk AI as AI that could endanger people (specifically EU citizens) and their opportunities, property, society, essential services, or fundamental rights. Applications that fall under this classification include recruitment, creditworthiness, self-driving cars, and remote surgery, among others.

For these systems, the regulation stipulates requirements around high-quality data sets, system documentation, transparency, and human oversight, along with operational visibility into robustness, accuracy, and security. The EU also encourages the same stipulations for lower risk AI systems.

Let’s dig deeper into three critical topics within the guidelines for high risk AI systems.

1) Transparency

“Users should be able to understand and control how the AI system outputs are produced.”

With the transparency mandate, the EU is looking to address AI’s “black box” problem. In other words, most models are not currently transparent, due to two characteristics of ML:

- Unlike other algorithmic and statistical models that are designed by humans, ML models are trained on data automatically by algorithms.

- As a result of this automated generation, ML models can absorb complex nonlinear interactions from the data that humans cannot otherwise discern.

This complexity obscures how a model converts input to output, which creates a trust and transparency problem. Model complexity gets worse for modern deep learning models, making them even more difficult to explain and reason about.

In addition, an ML model can absorb, add, or amplify bias from the data it was trained on. Without a good understanding of model behavior, practitioners cannot ensure the model is being fair, especially in high risk applications that impact people’s opportunities.

2) Monitoring

“High risk AI systems should perform consistently throughout their lifecycle and meet a high level of accuracy, robustness, and security. Also in light of the probabilistic nature of certain AI systems’ outputs, the level of accuracy should be appropriate to the system’s intended purpose and the AI system should indicate to users when the declared level of accuracy is not met so that appropriate measures can be taken by the latter.”

ML models are unique software entities, compared to traditional code, in that they are probabilistic in nature. They are trained for high performance on repeatable tasks using historical examples. As a result, their performance can fluctuate and degrade over time due to changes in the model input after deployment. Depending on the impact of a high risk AI application, a shift in its predictive power can have a significant consequence for the use case. For example, an ML model for recruiting that was trained on a high percentage of employed candidates will degrade if the real-world data starts to contain a high percentage of unemployed candidates, say in the aftermath of a recession. Such a shift can also lead to the model making biased decisions.

Monitoring these systems enables continuous operational visibility to ensure their behavior does not drift from the intention of the model developers and cause unintended consequences.
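As a rough illustration of what a drift check might look like, the sketch below computes a Population Stability Index (PSI) over a categorical input, using the recruiting scenario described above. The function name `categorical_psi`, the toy data, and the 0.25 alert threshold (a commonly quoted rule of thumb, not something the proposal prescribes) are all assumptions made for this example.

```python
import math
from collections import Counter

def categorical_psi(baseline, live, eps=1e-6):
    """Population Stability Index between two samples of a categorical feature."""
    b_counts, l_counts = Counter(baseline), Counter(live)
    score = 0.0
    for category in sorted(set(baseline) | set(live)):
        # Floor each share at eps so an unseen category does not produce log(0).
        b = max(b_counts[category] / len(baseline), eps)
        l = max(l_counts[category] / len(live), eps)
        score += (l - b) * math.log(l / b)
    return score

# Hypothetical data: employment status of candidates at training time vs. in production.
training = ["employed"] * 90 + ["unemployed"] * 10
production = ["employed"] * 50 + ["unemployed"] * 50

drift = categorical_psi(training, production)
ALERT_THRESHOLD = 0.25  # assumed rule-of-thumb cutoff, tune per use case
if drift > ALERT_THRESHOLD:
    print(f"Input drift detected: PSI={drift:.3f}")
```

A score of zero means the two distributions match; larger values indicate that the production data has moved away from what the model was trained on.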

3) Record-keeping

“High risk AI systems shall be designed and developed with capabilities enabling the automatic recording of events (‘logs’) while the high risk AI system is operating. The logging capabilities shall ensure a level of traceability of the AI system’s functioning throughout its lifecycle that is appropriate to the intended purpose of the system.”

Since the ML models and data behind AI systems are constantly changing, any operational use case will now require model behavior to be continuously recorded. This means logging all model inferences so they can be replayed and explained at a future time, which allows for auditing and remediation.
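One way to picture this is an append-only log of inference events that can later be replayed against a model. The sketch below is a minimal illustration under assumptions of our own: the event fields, the `log_inference` and `replay` helpers, and the toy loan model are hypothetical and not taken from the proposal or any particular product.

```python
import io
import json
import time
import uuid

def log_inference(sink, model_version, features, prediction):
    """Append one inference event as a JSON line and return its id."""
    event = {
        "event_id": str(uuid.uuid4()),
        "timestamp": time.time(),
        "model_version": model_version,
        "features": features,
        "prediction": prediction,
    }
    sink.write(json.dumps(event, sort_keys=True) + "\n")
    return event["event_id"]

def replay(source, model_fn):
    """Re-run logged inputs and yield events whose prediction no longer matches."""
    for line in source:
        event = json.loads(line)
        if model_fn(event["features"]) != event["prediction"]:
            yield event

def toy_model(features):
    # Hypothetical stand-in for a deployed model.
    return "approve" if features["income"] >= 50_000 else "deny"

log = io.StringIO()  # in production this would be durable storage
for applicant in ({"income": 72_000}, {"income": 31_000}):
    log_inference(log, "v1", applicant, toy_model(applicant))

log.seek(0)
mismatches = list(replay(log, toy_model))  # empty when behavior is unchanged
```

Recording the model version alongside each event is what makes a later audit meaningful, since the same input may be scored differently by a newer model.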

Impact on MLOps

This regulation is still in the proposal stage, and just like GDPR, it will likely pass through several iterations on its journey towards approval by the EU. Once approved, there is typically a defined period of time before the regulation goes into effect to give companies time to adopt the new rules. For example, while GDPR was adopted in April 2016, it became enforceable in May 2018.

Enterprise teams responsible for ML Operations, or MLOps, will therefore need to prepare for process and tooling updates as they incorporate these guidelines into their development. While the EU provides oversight for high risk AI applications, it encourages the same guidelines for lower risk applications as well. This could simplify the ML development process for teams, in that all ML models can internally follow the same guidelines.

Although the enforcement details will become clearer once the regulation passes, we can infer what changes are needed in the areas of model understanding, monitoring, and audit from today’s best practices. The good news is that adopting these guidelines will not only help in building AI responsibly but also keep ML models operating at high performance.

To ensure compliance with the model understanding guidelines of the proposal, teams should adopt tooling that provides insights into model behavior to all the stakeholders of AI, not just the model developers. These tools need to work for internal collaborators with different levels of technical understanding and also allow model explanations to be surfaced correctly to the end user.

- Explanations are a critical tool in achieving trust with AI. Context is key in understanding and explaining models, and these explanations need to adapt to work across model development teams, internal business stakeholders, regulators and auditors, and end users. For example, while an explanation given to a technical model developer would be far more detailed than one given to a compliance officer, an explanation given to the end user would be simple and actionable. In the case of a loan denial, the applicant should be able to understand how the decision was made and view suggestions on actions to take to increase their chances of loan approval.

- Model developers additionally need to assess whether the model will behave correctly when confronted with real-world data. This requires deeper model analysis tools to probe the model for complex interactions and uncover any risk.

- Bias detection needs separate processes and tooling to provide visibility into model discrimination on protected attributes, which are typically domain dependent.
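To make the bias detection point concrete, here is a minimal sketch of one common fairness check: comparing the rate of favorable decisions across groups defined by a protected attribute. The metric (a disparate impact ratio), the helper names, and the toy data are assumptions chosen for illustration; which attributes and thresholds matter will depend on the domain.

```python
from collections import defaultdict

def selection_rates(groups, decisions):
    """Share of favorable decisions (1) per group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(groups, decisions):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = selection_rates(groups, decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0

# Hypothetical decisions: group "a" is approved 8 of 10 times, group "b" 4 of 10.
groups = ["a"] * 10 + ["b"] * 10
decisions = [1] * 8 + [0] * 2 + [1] * 4 + [0] * 6

ratio = disparate_impact_ratio(groups, decisions)
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a signal that the model’s behavior on that attribute deserves a closer look.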

Explainable AI, a recent research advancement, is the technology that unlocks the AI black box so humans can understand what is going on inside AI models and ensure AI-driven decisions are transparent, accountable, and trustworthy. This explainability powers a deep understanding of model behavior. Enterprises need to have Explainable AI solutions in place to allow their teams to debug and provide transparency around a wide range of models.
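As a simplified sketch of what an explanation can look like, consider a loan scoring model that happens to be linear. In that special case, each feature’s contribution to the score relative to a baseline applicant is just its weight times the difference from the baseline. The weights, feature names, and baseline below are invented for this example; nonlinear models need more general attribution techniques, which this sketch does not attempt to implement.

```python
def explain_linear(weights, baseline, applicant):
    """Per-feature contribution to a linear score, relative to a baseline applicant."""
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    # Most negative first: these features pushed the score down the most.
    return sorted(contributions.items(), key=lambda item: item[1])

# Hypothetical model and applicants.
weights = {"income_k": 0.02, "debt_ratio": -3.0, "late_payments": -0.5}
baseline = {"income_k": 60, "debt_ratio": 0.30, "late_payments": 0}
applicant = {"income_k": 45, "debt_ratio": 0.50, "late_payments": 2}

ranked = explain_linear(weights, baseline, applicant)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.2f}")
```

The same ranked list can be presented in full to a model developer, or reduced to the top one or two factors and phrased as actionable advice for the loan applicant.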

To address the visibility, degradation, and bias challenges for deployed models, teams need to ensure they have monitoring in place for ML models. Monitoring systems allow deployment teams to verify that model behavior is not drifting and causing unintended consequences. These systems typically provide alerting options to react to immediate operational issues. Since ML is an iterative process that creates multiple improved versions of the model, monitoring systems should provide comparative capabilities so developers can swap models with full behavioral visibility.
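A very small version of such an alert might track accuracy over a rolling window of labeled outcomes and flag when it falls below a declared level. The `AccuracyMonitor` class, the window size, and the 0.9 declared accuracy below are assumptions for illustration only, and the sketch presumes ground-truth labels eventually become available.

```python
from collections import deque

class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` labeled predictions."""

    def __init__(self, declared_accuracy, window=100):
        self.declared_accuracy = declared_accuracy
        self.outcomes = deque(maxlen=window)  # old outcomes fall off automatically

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def below_declared(self):
        current = self.accuracy()
        return current is not None and current < self.declared_accuracy

# Hypothetical stream: 7 correct predictions followed by 3 incorrect ones.
monitor = AccuracyMonitor(declared_accuracy=0.9, window=10)
for prediction, actual in [(1, 1)] * 7 + [(1, 0)] * 3:
    monitor.record(prediction, actual)

if monitor.below_declared():
    print(f"Alert: rolling accuracy {monitor.accuracy():.2f} is below the declared level")
```

Running one monitor per model version is a simple way to compare a candidate model against the one it would replace.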

For the record-keeping aspects, teams need to ensure they record all, or at least a sample, of the model’s predictions so they can replay them for troubleshooting later.
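If recording every prediction is too costly, one option is deterministic sampling keyed on a request identifier, so that the same request is always either kept or dropped. The sketch below assumes each request carries a string id; the `should_log` helper and the 10% rate are hypothetical choices for this example.

```python
import hashlib

def should_log(request_id, sample_rate):
    """Deterministically keep roughly `sample_rate` of requests, keyed on their id."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big")  # uniform integer in [0, 2**64)
    return bucket < int(sample_rate * 2**64)

# Hypothetical request ids; about 10% of them should be selected for logging.
request_ids = [f"req-{i}" for i in range(10_000)]
kept = [rid for rid in request_ids if should_log(rid, 0.10)]
print(f"Logged {len(kept)} of {len(request_ids)} predictions")
```

Because the decision depends only on the id, upstream and downstream services can agree on which requests were sampled without coordinating.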

Broader impact

The proposed regulation is applicable to all providers deploying AI systems in the EU, whether they are based in the EU or in a third country. As teams scale their ML development, their processes will need to provide a robust collection of validation and monitoring tools to better equip model developers and IT for operational visibility.

It’s clear that MLOps teams need to explore ways to bolster their ML development with updated processes and tools that bring transparency across model understanding, robustness, and fairness, so they are better prepared for upcoming guidelines. If you’d like to get started quickly, read more about our Model Performance Management framework, which explains how to put structured observability into your MLOps.