What’s that Buzz?

If you haven’t heard about ChatGPT, you must be hiding under a very large rock. The viral chatbot, used for natural language processing tasks like text generation, is hitting the news everywhere. OpenAI, the company behind it, was recently in talks to get a valuation of $29 billion¹ and Microsoft may soon invest another $10 billion².

ChatGPT is an autoregressive language model that uses deep learning to produce text. It has amazed users with its detailed answers across a variety of domains. Its answers are so convincing that it can be difficult to tell whether or not they were written by a human. Built on OpenAI’s GPT-3 family of large language models (LLMs), ChatGPT was launched on November 30, 2022. It is one of the largest LLMs and can write eloquent essays and poems, produce usable code, and generate charts and websites from text descriptions, all with limited to no supervision. ChatGPT’s answers are so good that it is showing itself to be a potential rival to the ubiquitous Google search engine³.

Large language models are … well … large. They’re trained on enormous amounts of text data, which can be on the order of petabytes, and have billions of parameters. The resulting multi-layer neural networks are often several terabytes in size. The hype and media attention surrounding ChatGPT and other LLMs is understandable: they are indeed remarkable developments of human ingenuity, sometimes surprising the developers of these models with emergent behaviors. For example, GPT-3’s answers are improved by using certain ‘magic’ phrases like “Let’s think step by step” at the beginning of a prompt⁴. These emergent behaviors point to the models’ incredible complexity, which, combined with a current lack of explainability, has even made developers ponder whether the models are sentient⁵.

The Spectre of Large Language Models

With all the positive buzz and hype, there has been a smaller, forceful chorus of warnings from those within the responsible AI community. Notably in 2021, Timnit Gebru, a prominent researcher working on responsible AI, published a paper⁶ warning of the many ethical issues related to LLMs; the dispute over that paper led to her being fired from Google. These warnings span a wide range of issues⁷: lack of interpretability, plagiarism, privacy, bias, model robustness, and environmental impact. Let’s dive a little into each of these topics.

Trust and Lack of Interpretability:

Deep learning models, and LLMs in particular, have become so large and opaque that even the model developers are often unable to understand why their models are making certain predictions. This lack of interpretability is a significant concern, especially in settings where users want to know why and how a model generated a particular output.

In a lighter vein, our CEO, Krishna Gade, used ChatGPT to create a poem⁸ on explainable AI in the style of John Keats, and, frankly, I think it turned out quite well.

Krishna rightly pointed out that transparency around how the model arrived at this output is lacking. For pieces of work produced by LLMs, the lack of transparency around which sources of data the output draws on means that the answers provided by ChatGPT are impossible to properly cite, and therefore impossible for users to validate or trust⁹. This has led to bans of ChatGPT-created answers on forums like Stack Overflow¹⁰.

Transparency and an understanding of how a model arrived at its output become especially important when using something like OpenAI’s Embedding Model¹¹, which inherently contains a layer of obscurity, or in other cases where models are used for high-stakes decisions. For example, if someone were to use ChatGPT to get first aid instructions, they need to know the response is reliable, accurate, and derived from trustworthy sources. While various post-hoc methods to explain a model’s choices exist, these explanations are often overlooked when a model is deployed.

The ramifications of such a lack of transparency and trustworthiness are particularly troubling in the era of fake news and misinformation, where LLMs could be fine-tuned to spread misinformation and threaten political stability. While OpenAI is working on various approaches to identify its model’s output and plans to embed cryptographic tags to watermark the outputs¹², these solutions can’t come fast enough and may be insufficient.

This leads to issues around…

Plagiarism:

Difficulty in tracing the origin of a perfectly crafted ChatGPT essay naturally leads to conversations on plagiarism. But is this really a problem? This author doesn’t think so. Before the arrival of ChatGPT, students already had access to services that would write essays for them¹³, and there has always been a small percentage of students who are determined to cheat. But hand-wringing over ChatGPT’s potential to turn all of our children into mindless, plagiarizing cheats has been at the top of many educators’ minds and has led some school districts to ban the use of ChatGPT¹⁴.

Conversations on the possibility of plagiarism detract from the larger and more important ethical issues related to LLMs. Given that there has been so much buzz on this topic, I’d be remiss not to mention it.

Privacy:

Large language models are at risk for data privacy breaches if they are used to handle sensitive data. Training sets are drawn from a range of data, at times including personally identifiable information¹⁵ (names, email addresses¹⁶, phone numbers, addresses, medical records), which may therefore appear in the model’s output. While this is an issue with any model trained on sensitive data, given how large training sets are for LLMs, this problem could impact many people.
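As a rough illustration of one possible safeguard, generated text can be scanned for obvious PII patterns before it reaches a user. The sketch below is only that: the regular expressions and the `find_pii` and `redact` helpers are assumptions made for this example, not anything OpenAI is known to use, and they would miss many real-world formats (names, street addresses, medical records).

```python
import re

# Illustrative patterns only (assumptions); real PII detection needs far broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def find_pii(text):
    """Return a dict mapping a PII type to the list of matches found in text."""
    return {
        "email": EMAIL_RE.findall(text),
        "phone": PHONE_RE.findall(text),
    }


def redact(text):
    """Replace every matched email address or phone number with a placeholder."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)
```

A filter like this only catches leaks at output time; it does nothing about sensitive data already absorbed during training.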

Baked in Bias:

As previously mentioned, these models are trained on huge corpuses of data. When training data sets are so large, they become very difficult to audit and are therefore inherently risky⁵. This data contains societal and historical biases¹⁷, and thus any model trained on it is likely to reproduce these biases if safeguards are not put in place. Many popular language models have been found to contain biases, which can increase the dissemination of prejudiced ideas and perpetuate harm against certain groups. GPT-3 has been shown to exhibit common gender stereotypes¹⁸, associating women with family and appearance and describing them as less powerful than male characters. Sadly, it also associates Muslims with violence¹⁹: two-thirds of responses to a prompt containing the word “Muslim” contained references to violence. It’s likely that even more biased associations exist and have yet to be uncovered.
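A figure such as “two-thirds of responses” comes from sampling many completions for a prompt and counting how many match some criterion. The sketch below shows that counting step in its simplest form. It is not the method used in the cited study: the `keyword_rate` helper is hypothetical, and plain substring matching is a crude stand-in for the careful annotation a real bias audit would need.

```python
def keyword_rate(completions, keywords):
    """Fraction of completions containing at least one keyword (case-insensitive).

    Uses plain substring matching, so "shot" also matches "shots"; a real
    audit would need a more careful definition of what counts as a match.
    """
    if not completions:
        return 0.0
    lowered = [k.lower() for k in keywords]
    hits = sum(any(k in c.lower() for k in lowered) for c in completions)
    return hits / len(completions)
```

Running the same count over prompts that differ only in the group mentioned gives a simple way to compare association rates across groups.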

Notably, Microsoft’s chatbot quickly became a parrot of the worst internet trolls in 2016²⁰, spewing racist, sexist, and other abusive language. While ChatGPT has a filter to attempt to avoid the worst of this kind of language, it may not be foolproof. OpenAI pays for human labelers to flag the most abusive and disturbing pieces of data, but the company it contracts with has faced criticism for paying its workers less than $2 per hour, and the workers report suffering deep psychological harm²¹.

Model Robustness and Security:

Since LLMs come pre-trained and are subsequently fine-tuned to specific tasks, they create a number of issues and security risks. Notably, LLMs lack the ability to provide uncertainty estimates²². Without knowing the degree of confidence (or uncertainty) of the model, it’s difficult for us to decide when to trust the model’s output and when to take it with a grain of salt²³. This affects their ability to perform well when fine-tuned to new tasks and to avoid overfitting. Interpretable uncertainty estimates have the potential to improve the robustness of model predictions.
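To make the idea of an uncertainty estimate concrete, one common proxy is the entropy of the model’s next-token probability distribution: a peaked distribution gives low entropy, a flat one gives high entropy. The sketch below assumes access to per-token probabilities, which not every hosted model exposes, and the helper names are hypothetical. Entropy of this kind is not a calibrated confidence score, so it illustrates the concept rather than solving the problem described above.

```python
import math


def token_entropy(probs):
    """Shannon entropy, in bits, of one next-token probability distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def mean_entropy(step_probs):
    """Average per-token entropy over the steps of a generated sequence."""
    if not step_probs:
        raise ValueError("step_probs must contain at least one distribution")
    return sum(token_entropy(p) for p in step_probs) / len(step_probs)
```

A downstream application could, for instance, flag responses whose mean entropy exceeds a chosen threshold for human review; the threshold itself would have to be tuned empirically.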

Model security is a looming issue because of the generality of an LLM’s parent model before the fine-tuning step. As a result, a model may become a single point of failure and a prime target for attacks that could affect any applications derived from the model of origin. Additionally, with the lack of supervised training, LLMs can be vulnerable to data poisoning²⁵, which could lead to the injection of hateful speech to target a specific company, group or individual.

LLMs’ training corpuses are created by crawling the internet for a variety of language and subject sources; however, they are only a reflection of the people who are most likely to have access to and regularly use the internet. Therefore, AI-generated language is homogenized and often reflects the practices of the wealthiest communities and countries⁶. LLMs applied to languages not in the training data are more likely to fail, and more research is needed on addressing issues around out-of-distribution data.

Environmental Impact and Sustainability:

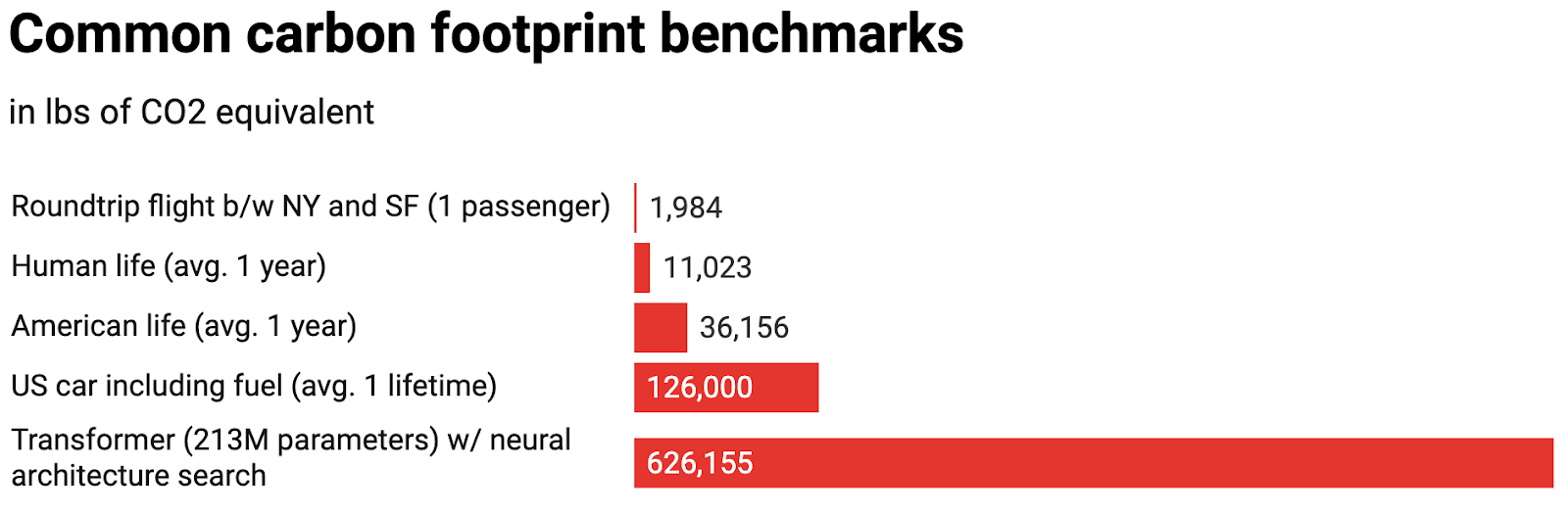

A 2019 paper by Strubell and collaborators outlined the enormous carbon footprint of the training lifecycle of an LLM²⁴,²⁶, where training a neural architecture search based model with 213 million parameters was estimated to produce more than five times the lifetime carbon emissions of the average car. Remembering that GPT-3 has 175 billion parameters, and the next generation GPT-4 is rumored to have 100 trillion parameters, this is an important aspect in a world that is facing the increasing horrors and devastation of a changing climate.

Chart: MIT Technology Review. Source: Strubell et al.

What Now?

Any new technology will bring advantages and disadvantages. I have given an overview of many of the issues related to LLMs, but I want to stress that I am also excited by the new possibilities and the promise these models hold for each of us. It is society’s responsibility to put in the proper safeguards and use this new tech wisely. Any model used on the public or let into the public domain needs to be monitored, explained, and regularly audited for model bias. In part 2 of this blog series, I will outline recommendations for AI/ML practitioners, enterprises, and government agencies on how to address some of the issues particular to LLMs.

——