We often consider the benefits of AI and machine learning, but what about the potential for harm? As more aspects of our lives have moved online because of the COVID-19 pandemic, the influence of AI systems is rapidly accelerating. It’s more important than ever to ensure that AI is used ethically and responsibly.

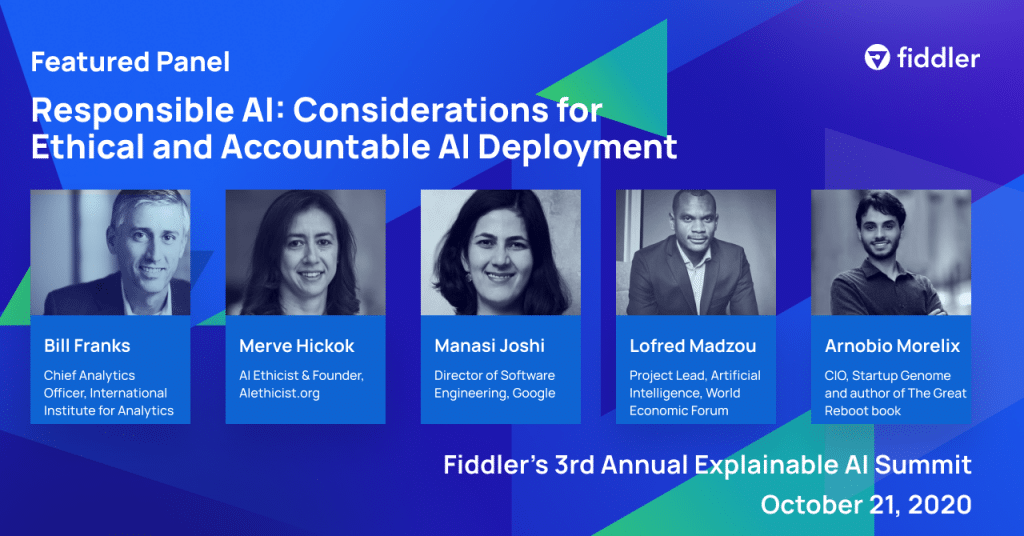

As part of Fiddler’s third annual Explainable AI Summit in October 2020, we brought together a diverse panel of experts in responsible AI. They shared what they’ve learned from working with some of the world’s largest companies and governments to create and uphold standards for implementing AI systems. In this article, we’ll walk through some of the key points from our conversation; you can also watch the full-length video of our discussion here.

What is responsible AI?

“Responsible AI” refers to the responsible and ethical use of AI. That is, when we talk about responsible AI, we mean decisions being made responsibly by the humans involved in the design and implementation of AI systems. Ethics come into play because they are the guiding principles that help a company, or an entire society, decide what it means to act for the greater good (which sometimes might mean not using AI to solve a certain problem, if the solution could cause harm).

Why it matters

Responsible AI is critical when it comes to systems making automated decisions that can affect a person’s health, well-being, or access to resources and opportunities. We could extend this to include any impact on the environment and climate change as well. In 97 Things About Ethics Everyone in Data Science Should Know, panelist Bill Franks has written about potential pitfalls. One of the most thought-provoking challenges? Monitoring autonomous weapons.

Use cases for responsible AI can be found in all walks of life. Consider the idea of using AI systems in education for admission to a university, or for resume screening to connect job-seekers with recruiters, or in healthcare. Not all of these domains necessarily have representative data or ground-truth labels. This makes responsible AI even more important: when you are generalizing from a small slice of the population, it is hard to avoid bias and achieve equitable outcomes.

Implementing responsible AI

Implementing responsible AI is about ensuring that the behavior of your AI system is consistent with the requirements that you have defined. How can you succeed at this deceptively simple task? Here are some frameworks our panelists shared for putting responsible AI into practice.

5 Key Principles

- Reliability: How do model predictions change in different contexts? How generalizable is the model?

- Fairness: By using this model, are we making sure we aren’t creating or reinforcing bias?

- Interpretability: Do we know why the model made its predictions? Can we generate diagnostics that provide transparency to a wide audience: data scientists, governments, and ordinary people affected by the model’s outputs?

- Privacy: Have we made sure that the data for our model respects user privacy and complies with all laws and regulations?

- Security: Is our model safe from attacks that might poison the data? Are we making sure the model doesn’t leak sensitive information?
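One way to make the fairness question concrete is to compare how often a model returns a positive decision for different groups. The sketch below is only an illustration and was not presented by the panelists: the function names, the toy predictions, and the choice of metric (the gap in selection rates, often called demographic parity difference) are all assumptions. A real audit would likely combine several metrics.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive predictions (1s) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {group: positives[group] / totals[group] for group in totals}


def selection_rate_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical data: 1 = selected (e.g. resume passed screening), 0 = rejected.
preds = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = selection_rate_gap(preds, groups)
print(rates, gap)
```

A gap near zero means the groups are selected at similar rates. A large gap does not prove the model is unfair, but it is a signal worth investigating.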

Operational Procedures

It can also be useful to identify the steps that your organization can take to operationalize its principles around responsible AI. These might look like:

Step 1: Document the desired behavior of the AI systems and the larger product that they fit into (for example, a face-detection system for airports). What are the key performance indicators (KPIs) that will show whether the product is behaving as desired?

Step 2: Record the actual behavior while the system is running. A lot of the work here will involve translating the system’s decisions, and the context in which those decisions were made, into language that all stakeholders can understand (including end-users).

Step 3: Proactively look for what could go wrong. Organizations are used to taking a reactive approach to issues. But for responsible AI, where regulations are still emerging, it’s critical to actively identify all the potential areas of failure.

Step 4: Put an internal auditing and reporting process in place, and make sure that these reports can be accessed by all your stakeholders, including end-users and customers.

Our panelists emphasized that the final step, transparency, is critical. When an organization like the World Economic Forum implements ethical AI, they’re reimagining regulation, working with governments and regulators to create new policies and frameworks.
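The four steps above can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not a process described by the panelists: the `KpiRequirement` class, the `audit` function, the KPI names, and the thresholds are all hypothetical. The idea is to write down the desired behavior as explicit bounds, compare it with what was recorded in production, and produce findings in plain language that any stakeholder can read.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class KpiRequirement:
    """Step 1: a documented expectation for one KPI (hypothetical schema)."""
    name: str
    minimum: Optional[float] = None
    maximum: Optional[float] = None


def audit(requirements, observed):
    """Steps 2-4: compare recorded values with requirements, report findings."""
    findings = []
    for req in requirements:
        value = observed.get(req.name)
        if value is None:
            # Step 3: a missing measurement is itself a potential failure.
            findings.append(f"{req.name}: no measurement recorded")
        elif req.minimum is not None and value < req.minimum:
            findings.append(
                f"{req.name}: {value} is below the documented minimum {req.minimum}"
            )
        elif req.maximum is not None and value > req.maximum:
            findings.append(
                f"{req.name}: {value} is above the documented maximum {req.maximum}"
            )
    return findings


# Hypothetical requirements and measurements for a face-detection product.
requirements = [
    KpiRequirement("detection_accuracy", minimum=0.95),
    KpiRequirement("selection_rate_gap", maximum=0.1),
]
observed = {"detection_accuracy": 0.97, "selection_rate_gap": 0.25}

report = audit(requirements, observed)
for line in report:
    print(line)
```

Keeping the requirements as data, separate from the checking logic, means the same document can be reviewed by risk and compliance teams and enforced by engineers.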

Cultural Changes

Above all, responsible AI is only possible if you have a culture that supports it. As AI ethicist Merve Hickok explained, quoting Peter Drucker, “Culture eats strategy for breakfast.” The intention to have ethical AI needs buy-in across the company, from C-level executives to the developers implementing the models. We also need better cross-functional alignment, and need to give risk and compliance experts more tools that help them communicate with data scientists (and vice versa).

Implementing AI ethically is a challenge that businesses and governments will continue to grapple with, especially as the pace of AI implementation accelerates. Panelist Arnobio Morelix is writing a book, The Great Reboot, about shifts that will happen in a post-pandemic world. If we can use this moment to push for positive cultural changes and implement practical solutions, perhaps there will soon come a day when we don’t have to explain what “responsible AI” means, because the term will already be part of the mainstream.

This article was based on a conversation that brought together panelists from financial institutions, as part of Fiddler’s third annual Explainable AI Summit on October 21, 2020. You can view the recorded conversation here.

Panelists:

Arnobio Morelix, Research & Data Science Leader

Manasi Joshi, Director of Software Engineering, Google

Merve Hickok, AI Ethicist & Founder, AIethicist.org

Bill Franks, Chief Analytics Officer, International Institute for Analytics

Lofred Madzou, Project Lead, Artificial Intelligence, World Economic Forum

Moderated by Anusha Sethuraman, Head of Marketing, Fiddler